"Why don't you publish them on a peer reviewed forum...

... if your findings are so important?" TL;DR - it slows everything down

One of the reasons people often dismiss vaccine-critical Substack articles is the lack of peer review.

In fact, under normal circumstances, I would agree with this objection.

There is an inherent absence[1] of quality control when someone chooses to publish a finding on Substack instead of in a peer-reviewed forum. But it is still the fastest way to get things out to the public, which is especially important in an era where an out-of-control FDA is approving new vaccines at a breakneck pace, as if racing against time. (Are they?)

Another great article by Jeff Tucker:

> That said, the release of yet another shot, implausibly called NexSpike, especially in light of all evidence and promises, is a tremendous shock for which no one was prepared. If they were in the works and the appointees could not stop them, we should be told that and the full explanation should be given to all. If President Trump himself is still attached to the foul spawn of Operation Warp Speed, and has forced them back onto the market despite vast public opposition, we should know that too.
>
> Above all else, what we really need is the blunt truth about the last five years. We need to know that the people in office, whether elected or appointed, still share the deep outrage that fueled the movement that put them in power. We need to hear frank talk about the harms, the mandates, the suffering, the deceptions, the payoffs, the graft, the abuses, the illegal vanquishing of freedom, science, and human rights.

Specifically, there are four things that make peer-reviewed forums a bad venue for this kind of work:

Publication delay

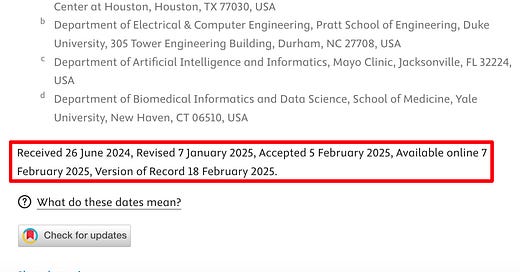

Consider this paper, which is probably a reasonably good representation of the kind of paper I would try to publish.

Notice the publication delay: the paper was received on 26 Jun 2024 and only became available in Feb 2025, more than seven months later. This is a really long delay for what I would consider a very urgent matter.
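To put a number on that delay, here is a minimal Python sketch. The exact day in Feb 2025 is not given above, so the first and last days of the month are used as assumed bounds; the variable names are purely illustrative.

```python
from datetime import date

# Date the paper was received, as stated in the article.
received = date(2024, 6, 26)

# Assumption: the exact day in Feb 2025 is unknown, so bracket it
# with the first and last day of that month.
earliest_available = date(2025, 2, 1)
latest_available = date(2025, 2, 28)

min_delay_days = (earliest_available - received).days
max_delay_days = (latest_available - received).days

print(f"Delay: between {min_delay_days} and {max_delay_days} days")
```

Either way, the gap is well over 200 days.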

Jargon

Here is the paper abstract:

Adverse event (AE) extraction following COVID-19 vaccines from text data is crucial for monitoring and analyzing the safety profiles of immunizations, identifying potential risks and ensuring the safe use of these products. Traditional deep learning models are adept at learning intricate feature representations and dependencies in sequential data, but often require extensive labeled data. In contrast, large language models (LLMs) excel in understanding contextual information, but exhibit unstable performance on named entity recognition (NER) tasks, possibly due to their broad but unspecific training. This study aims to evaluate the effectiveness of LLMs and traditional deep learning models in AE extraction, and to assess the impact of ensembling these models on performance.

Here is an AI-generated layperson summary:

Paper Title: Making Smart Computer Programs (AI) Better at Finding Key Information: A Look at How They Extract Side Effects from Different Online Sources

I. What This Study Is About (Introduction & Objective)

The Challenge: It's really important to quickly find information about side effects ("adverse events" or AEs) of medicines, especially vaccines, from text data (like reports or social media posts).

Current AI Tools:

"Deep Learning Models" (a type of AI) are good at finding patterns in text but need a lot of examples to learn from.

"Large Language Models" (LLMs) like GPT are great at understanding what text means, but they aren't always consistent when trying to pick out specific bits of information (like names of drugs or symptoms).

Our Goal: To see how well both these types of AI (LLMs and deep learning models) perform at finding vaccine-related AEs in text, and whether combining them ("ensembling") can make them even better.

II. How We Did the Study (Methods)

Our Data: We used a mix of text data from:

VAERS (Vaccine Adverse Event Reporting System): Official reports from the U.S. government (621 reports).

Social Media: Posts from Twitter (9,133 tweets) and Reddit (131 posts) about COVID-19 vaccines.

What We Looked For: We trained the AI to identify three specific types of information ("entities") in the text:

"Vaccine" (e.g., "Pfizer vaccine")

"Shot" (e.g., "first dose")

"Adverse Event" (e.g., "fever," "headache")

Our AI Models:

We tested several well-known LLMs: GPT-2, GPT-3.5, GPT-4, and Llama-2 (two versions).

We also tested traditional deep learning models: "Recurrent Neural Network" (RNN) and "BioBERT" (an AI model specialized for medical text).

Making Them Smarter ("Fine-tuning"): We further trained most of these AI models on our specific data to make them better at finding the exact information we needed.

Combining Strengths ("Ensembling"): We took the three best-performing individual models (fine-tuned GPT-3.5, RNN, and BioBERT) and combined their predictions using a "majority vote" system. This is like getting multiple experts to weigh in and choosing the most common answer.

How We Measured Success: We used a standard measure called "F1 score" to see how accurately the models identified the entities, both for exact matches and for close matches. We also checked how consistently human experts agreed when labeling the data.

III. What We Found (Results)

Human Agreement: Our human experts agreed very well on how to label the "vaccine" and "shot" entities, and moderately well for "adverse event" entities, showing our training data was reliable.

The "Ensemble" Model Was Best: The combined AI model (the "ensemble") consistently performed the highest across all types of information we were looking for:

"Vaccine": Identified with 87.8% accuracy.

"Shot": Identified with 93.0% accuracy.

"Adverse Event": Identified with 92.5% accuracy.

Overall: The ensemble model had an impressive 90.3% accuracy for exact matches and 92.6% for relaxed matches.

Training Helps: AI models that were "fine-tuned" on our specific data performed much better than those used without extra training.

Combining Works: While LLMs alone sometimes didn't do as well as traditional deep learning models for this task, putting them together in an "ensemble" significantly boosted the overall performance.

IV. What It All Means (Conclusion)

Key Takeaway: Our study clearly shows that combining fine-tuned Large Language Models and traditional deep learning models is a powerful and reliable way to automatically extract information about side effects from diverse text sources, including social media.

Why It Works: LLMs are great at understanding the natural language of social media, and deep learning models are strong at recognizing specific patterns. Combining them makes them more robust by covering each other's weaknesses.

Impact: This improved accuracy in finding side effects in text can greatly help medical professionals make better decisions and enhance overall drug safety monitoring.

Next Steps: Future work should focus on making LLMs even better and using even more varied data to ensure these methods can be applied broadly to other medical areas.

This is much better, but you cannot get anything published if you skip the jargon[2].
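For readers curious about two of the terms in the summary above, "majority vote" ensembling and the F1 score, here is a minimal Python sketch. It is not the paper's implementation: the token labels, the three "models", the tie-breaking rule and the token-level scoring are all assumptions made for illustration (the paper reports exact and relaxed entity matches, which are more involved).

```python
from collections import Counter

def majority_vote(predictions, fallback="O"):
    """Combine per-token labels from several models by strict majority vote."""
    combined = []
    for labels in zip(*predictions):
        label, count = Counter(labels).most_common(1)[0]
        # Assumption: without a strict majority, fall back to "O" (no entity).
        combined.append(label if count > len(labels) / 2 else fallback)
    return combined

def f1_score(gold, predicted, entity):
    """Token-level F1 for one entity type (a simplification)."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == entity and p == entity for g, p in pairs)
    fp = sum(g != entity and p == entity for g, p in pairs)
    fn = sum(g == entity and p != entity for g, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical sentence and labels, invented for this example.
tokens  = ["Pfizer", "second", "dose", "gave", "me", "a", "headache"]
gold    = ["VACCINE", "SHOT", "SHOT", "O", "O", "O", "AE"]
model_a = ["VACCINE", "SHOT", "SHOT", "O", "O", "O", "O"]
model_b = ["VACCINE", "O", "SHOT", "O", "O", "O", "AE"]
model_c = ["O", "SHOT", "SHOT", "O", "O", "AE", "AE"]

ensemble = majority_vote([model_a, model_b, model_c])

for name, pred in [("A", model_a), ("B", model_b), ("C", model_c), ("ensemble", ensemble)]:
    print(name, round(f1_score(gold, pred, "AE"), 3))
```

In this toy case each model makes a different mistake, so the vote cancels the errors out. That is the intuition behind "covering each other's weaknesses", although real data will rarely be this tidy.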

Does it contribute anything to the literature?

My typical article would not really be considered real computer science, in the sense of contributing something[3] to the existing literature.

The utility of vaccine injury dataset analysis is pretty obvious, but that does not make it novel in the sense required for publishing papers.

Lack of real world feedback

I often get comments on this Substack which lead me to investigate other things.

The best example of this was the comment I received from Devon Brewer about follow-up reports always being deleted.

This led to the publication of many follow-up articles at a rate which simply would not have been possible on peer-reviewed forums, turning it into a (somewhat) real-time discussion.

I don’t think you can get this kind of feedback during peer review, and even if you do, there simply won’t be any sense of urgency, and the feedback loop might never be closed.

To summarize: in my opinion, the fastest way to do these investigations is to keep publishing them on Substack.

[1] Not that there is much quality control when the CDC chooses to publish in its mouthpiece, MMWR. In fact, some of their publications go unchallenged even in regular journals; for example, no reviewer asked the CDC why they did not do proper text mining of the v-safe free-text data.

But two wrongs don’t make a right.

[2] In defense of the jargon: at least in computer science, jargon is just a way to transmit information in a compact form. Building consensus on the jargon is itself part of the training in computer science. I don’t have anything against jargon per se, but such papers are quite unsuitable for the task of warning people about all the safety signals seen in vaccine injury datasets.

[3] I might be able to find something “novel”, but remember that any new idea needs to be evaluated against other existing ideas, which adds significant overhead.

My peers are those who think, and much of my work is available on ResearchGate where everyone can comment.