CDC's v-safe text mining is laughable

The Case for Vaccine Data Science - Part 6

This is Part 6 of my Case for Vaccine Data Science series.

I was reading a paper about CDC’s v-safe free-text-response analysis, and it is just laughable.

First, remember that all the “solicited” responses in v-safe were just check-the-box fields for known, benign vaccination side effects. Any other adverse reaction was captured only by the free-text responses.

And the Oracle v-safe team said as late as Feb 2023 that they had not yet analyzed the free text.

Not to worry, because the CDC was on the case!

“Success! We looked for nothing and we found exactly nothing!”

That’s the only way I can describe it.

Here is how they describe their text mining approach (emphasis mine):

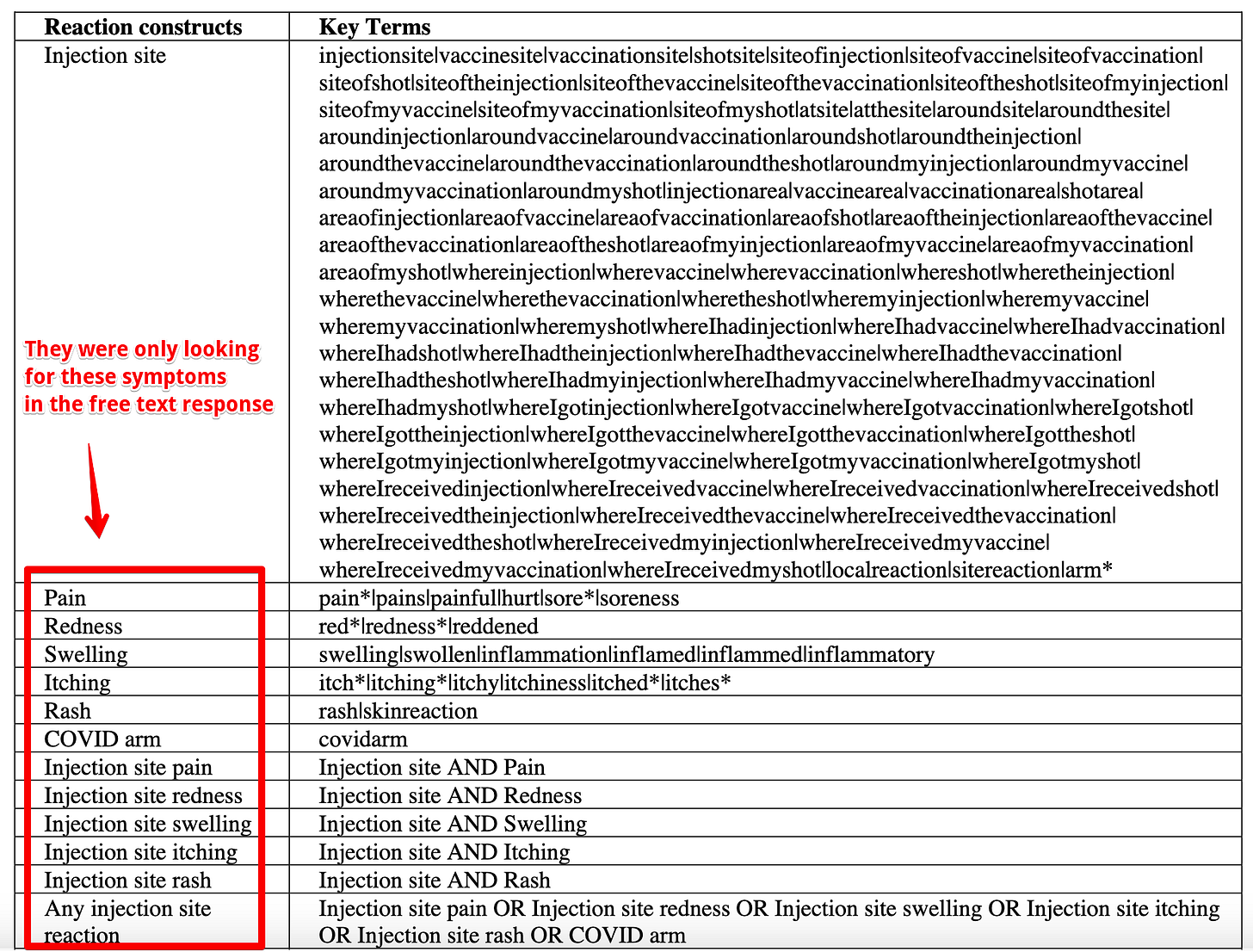

Free-text responses from participants who reported new or worsening symptoms on day 14 post-vaccination (dose 1 n = 171,003; dose 2 n = 74,603) were analyzed using text search methods in SAS (e.g., prx functions) [12]. Detailed methods are described in the Supplemental Material—Section B. In brief, to align free-text responses on the day 14 surveys with the local and systemic reactions solicited in day 0 to 7 surveys, we created a list of key terms to define each reaction (e.g., injection site pain, fatigue, headache). Special characters and spaces were removed from text strings prior to the case-insensitive search; for some key terms that could be common roots for words unrelated to the reaction of interest (e.g. “red”), the unmodified text string with spaces was used and a word boundary rule was applied (i.e., a non-word character or beginning or ending of the string was required before and after the key term). We created binary variables for each reaction using text search functions.

So they tried to “align” the free-text responses with the local and systemic reactions.

In other words, they tried to mine the free text responses based on the known but benign list of check-the-box reactions? And what exactly is the point of doing that?

At first, I could not actually believe this. I wondered if they failed to mention something, or maybe I just missed something very obvious.

And then I looked at the Supplementary Appendix and confirmed that all they did was to map the free text response to the already known benign symptoms!

Here is the process they used.

So they used regular expressions over strings (which was probably the state-of-the-art in text mining in the year 2005) to “confirm” that they were able to map the free text responses to the actual check-the-box symptoms.
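To make the quoted method concrete, here is a minimal Python sketch of that kind of key-term search. The paper used SAS prx functions, not Python, and the key terms below are my own placeholders, not the list from their Supplemental Material. It only follows the steps the excerpt describes: strip special characters and spaces, search case-insensitively, and apply a word-boundary rule for short roots like “red”.

```python
import re

# Hypothetical key terms for illustration; the paper's real list is in its supplement.
COMPACT_TERMS = {
    "headache": ["headache"],
    "fatigue": ["fatigue", "tired"],
    "injection_site_pain": ["sorearm", "armpain", "injectionsitepain"],
}
# Short roots that also occur inside unrelated words get a word-boundary rule.
BOUNDED_TERMS = {"redness": ["red"]}


def flag_reactions(text: str) -> dict[str, bool]:
    """Return one binary flag per solicited reaction for a free-text response."""
    lowered = text.lower()
    # Remove special characters and spaces before the substring search.
    compact = re.sub(r"[^a-z0-9]", "", lowered)
    flags = {}
    for reaction, terms in COMPACT_TERMS.items():
        flags[reaction] = any(term in compact for term in terms)
    for reaction, terms in BOUNDED_TERMS.items():
        # Require a non-word character (or string start/end) on both sides.
        flags[reaction] = any(
            re.search(rf"(?<!\w){re.escape(term)}(?!\w)", lowered)
            for term in terms
        )
    return flags


print(flag_reactions("My arm is RED and I have a head-ache"))
print(flag_reactions("Diagnosed with myocarditis, also bored"))
```

The second response comes back with every flag set to False. A search built only from the solicited reactions has no way to register anything else.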

What about the other symptoms?

Well, since they never tried to even look for the unsolicited symptoms, I am happy to report that they were 100% successful and did not find anything else!

They were even very proud of it, and mentioned it in the Limitations! (emphasis mine)

Lastly, the local and systemic reactions described in this report did not include more rare and severe adverse reactions not intended for identification in v-safe data. A report using Vaccine Adverse Event Reporting System data has already estimated that rates of severe allergic reactions and anaphylaxis were 2.5 and 4.7 cases per million doses administered for the mRNA-1273 and BNT162b2 vaccines, respectively [26].

So if it is not intended for identification in v-safe, does that just mean they never happen?

And if that is actually true, what was it that you were saying again, Dr David Gorski?

If your child, for instance, has a fever for a day after a vaccine, are you going to bother to report it to VAERS? Probably not. However, if someone dies soon after a vaccine, you can be damned sure that it will very likely be reported, particularly given the government’s implementation of V-Safe, its text messaging system that follows up COVID-19 vaccination with text messages asking if you’ve had any symptoms since vaccination and reminding you to report them to VAERS if you did. In other words, the more serious the AE, the more likely it is to be reported to VAERS, particularly with the reminders people who opted into V-Safe have been receiving.

Ahh, right.

This reminds me of the old joke about how “Absence of evidence is not the same thing as evidence of absence”. I wish someone could explain this phrase to the brilliant folks at the CDC some day.

Time for the spiderman meme.

So what about that pesky myocarditis thingy which seems to be causing minor problems like fatal cardiac arrests? What about GBS, Bell’s palsy, tinnitus, etc.?

Nothing! Not one!

But I do understand the philosophy here.

You see, if you already know that you have a “safe and effective” vaccine, why would you waste your time trying to actually prove it?

So I constructed a dataset for the serious VAERS reports from v-safe to check.

You can see that there are a total of nearly 6000 rows in the dataset.

However, remember that there could be multiple rows in the SYMPTOMS CSV file for a given VAERS_ID, so the total number of unique reports is smaller than the size of the dataset.

In fact, the total number of unique reports is only about 2500.

What this means is that on average, there are actually more than 2 rows worth of symptoms in the SYMPTOMS CSV file for each serious VAERS report from v-safe.

Since a row can hold at most 5 symptoms, and assuming a new row is only started once the previous one is full, this works out to somewhere between 8 and 12 symptoms on average for each of these 2500 reports.
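The rows and symptoms per report can also be counted directly instead of estimated. Here is a minimal sketch using only the Python standard library. It assumes the SYMPTOMS CSV layout of a VAERS_ID column plus SYMPTOM1 to SYMPTOM5. The three rows are made up purely to show the mechanics and are not real reports.

```python
import csv
import io
from collections import Counter

SYMPTOM_COLUMNS = [f"SYMPTOM{i}" for i in range(1, 6)]


def summarize(symptom_rows):
    """Count rows, unique reports, and filled symptom slots per report."""
    rows_per_report = Counter()
    symptoms_per_report = Counter()
    for row in symptom_rows:
        vaers_id = row["VAERS_ID"]
        rows_per_report[vaers_id] += 1
        # Only non-empty SYMPTOM1..SYMPTOM5 cells count as symptoms.
        symptoms_per_report[vaers_id] += sum(
            1 for col in SYMPTOM_COLUMNS if (row.get(col) or "").strip()
        )
    n_reports = len(rows_per_report)
    if n_reports == 0:
        raise ValueError("no rows to summarize")
    n_rows = sum(rows_per_report.values())
    return {
        "rows": n_rows,
        "reports": n_reports,
        "rows_per_report": n_rows / n_reports,
        "symptoms_per_report": sum(symptoms_per_report.values()) / n_reports,
    }


# Made-up toy rows, NOT real VAERS data.
TOY_CSV = """VAERS_ID,SYMPTOM1,SYMPTOM2,SYMPTOM3,SYMPTOM4,SYMPTOM5
1,Chest pain,Dyspnoea,Myocarditis,Troponin increased,Pyrexia
1,Fatigue,Headache,,,
2,Seizure,,,,
"""

summary = summarize(csv.DictReader(io.StringIO(TOY_CSV)))
print(summary)
```

On the real file, you would pass a `csv.DictReader` opened on the SYMPTOMS CSV, filtered to the VAERS_IDs in the dataset.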

This looks like a lot of potential AEs that CDC could have tried to find when it did the v-safe free text analysis.

Just to help them further, I will do some searches to see if they might have missed some important, known severe adverse events.

List of known, omitted severe adverse events

Of course, it can be very hard to come up with a list of keywords to search for if you don’t really know what you are looking for. That’s just how it is.

So I have decided to be extra super helpful.

In a previous article, I mentioned that v-safe decided to omit a list of symptoms from their initial draft and add only the known, benign ones into the v-safe check-the-box list.

Here is the omitted list:

I suppose they could have at least looked for one of these symptoms in the text fields?

I wonder if they might have some incentive to ignore them?

Myocarditis and Pericarditis within 2 weeks of vaccination

The paper restricts the study to symptoms which occurred within 2 weeks of vaccination. That window is arbitrary, and shorter than the roughly 6 weeks that CDC itself considers standard.

But let us just stick to that timeline.

The paper was written in Oct 2021, so let us restrict the analysis to reports received before that date, and look at myocarditis and pericarditis reports.

There were still 12 reports which qualified despite so many filters.
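For anyone who wants to repeat this, here is a rough sketch of the filters in plain Python. It assumes the standard VAERS columns (VAERS_ID, RECVDATE and NUMDAYS in the DATA file, SYMPTOM1 to SYMPTOM5 in the SYMPTOMS file) and that RECVDATE is formatted MM/DD/YYYY. It also assumes the rows passed in have already been narrowed to the serious v-safe reports. The function and constant names are mine.

```python
from datetime import date, datetime

CUTOFF = date(2021, 10, 1)      # only reports received before this date
MAX_ONSET_DAYS = 14             # the paper's 2-week window
TARGET_TERMS = ("myocarditis", "pericarditis")


def parse_vaers_date(value):
    """Parse a date string, assuming the MM/DD/YYYY format."""
    return datetime.strptime(value.strip(), "%m/%d/%Y").date()


def qualifying_report_ids(data_rows, symptom_rows):
    """Return VAERS_IDs that pass the symptom, date, and onset filters."""
    ids_with_target = set()
    for row in symptom_rows:
        for i in range(1, 6):
            symptom = (row.get(f"SYMPTOM{i}") or "").lower()
            if any(term in symptom for term in TARGET_TERMS):
                ids_with_target.add(row["VAERS_ID"])

    qualifying = set()
    for row in data_rows:
        if row["VAERS_ID"] not in ids_with_target:
            continue
        numdays = (row.get("NUMDAYS") or "").strip()
        if not numdays:
            continue  # onset interval unknown, so the window cannot be checked
        received = parse_vaers_date(row["RECVDATE"])
        if received < CUTOFF and float(numdays) <= MAX_ONSET_DAYS:
            qualifying.add(row["VAERS_ID"])
    return qualifying
```

Reports with a blank NUMDAYS are dropped here, which makes the count conservative. Whether to keep them is a judgment call.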

Here is a list of other issues I found, based on the omitted list of check-the-box options.

Note: I am not applying the 14-day window to these. This is the full list for the omitted symptoms, limited to VAERS reports whose RECVDATE is earlier than ‘1 Oct 2021’. That means these reports were already in the system (despite any throttling delays) and should have been available for the CDC to analyze.

Acute Myocardial Infarction

Guillain Barre Syndrome

Myocarditis or Pericarditis

Narcolepsy or Cataplexy

Placenta-related issues

Seizures/Convulsions

Did this actually require text mining?

What is even more interesting is that there are only a handful of such reports, meaning there wasn’t even any need for “text mining”. Someone could have manually done a simple keyword search across all the reports, and then added them into the publication.
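To show how little machinery a “simple keyword search” needs, here is a minimal sketch. The category names are the ones from the omitted list above. The keywords under each category are my own guesses and are certainly incomplete, and the input is assumed to be (report id, text) pairs.

```python
# Keywords per omitted category; these are illustrative guesses, not a vetted list.
KEYWORDS = {
    "Acute Myocardial Infarction": ["myocardial infarction", "heart attack"],
    "Guillain Barre Syndrome": ["guillain"],
    "Myocarditis or Pericarditis": ["myocarditis", "pericarditis"],
    "Narcolepsy or Cataplexy": ["narcolepsy", "cataplexy"],
    "Placenta-related issues": ["placenta"],
    "Seizures/Convulsions": ["seizure", "convulsion"],
}


def search_reports(reports):
    """Map each category to the ids of reports whose text mentions a keyword."""
    hits = {category: [] for category in KEYWORDS}
    for report_id, text in reports:
        lowered = text.lower()
        for category, words in KEYWORDS.items():
            # Plain case-insensitive substring match, nothing smarter.
            if any(word in lowered for word in words):
                hits[category].append(report_id)
    return hits
```

A substring match like this will over-match in places (for example, “no seizures” still counts), so each hit would still need a human to read it. With only a handful of reports per category, that is not much work.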

While there will be some pushback that the total number is anyway very small compared to the number of vaccine doses, that pushback completely misses the point.

a) All these reports were received before the paper was published

b) Most of them happened within a few days of vaccination

c) All of these were severe AEs which were already listed in the clinical trial documents

In my view, the most problematic thing about these kinds of publications is that they give a false sense of security to the doctors and researchers who read them and think they add to the body of knowledge which “proves vaccine safety”, even though this paper does nothing of the sort.

And not even looking out for these AEs in your free text analysis, if only to point out the total number and add some blurb about how it was not statistically significant, makes it much harder for people to trust the CDC.

You might be thinking I am just stating the obvious given that you cannot find an adverse event if you do not know the right keyword to use. But the field of Machine Learning has now advanced so much that you can actually find at least some of these symptoms without knowing the right keywords to use. The “string regular expression” approach used by the paper to analyze the free text entries also tells me that even the reviewers of these CDC papers are clueless about recent advancements in Natural Language Processing.

Almost like it was deliberate, right? So upsetting. This is one of the most common ways the CDC obfuscators make signals disappear: by counting the most frequently reported single AEs. Of course those are the mildest, because the mildest are the most common. So they get this inane list of AEs and ignore the more severe ones.