Three ways to improve v-safe analysis

And why it has been so hard to prove or disprove claims from EITHER side

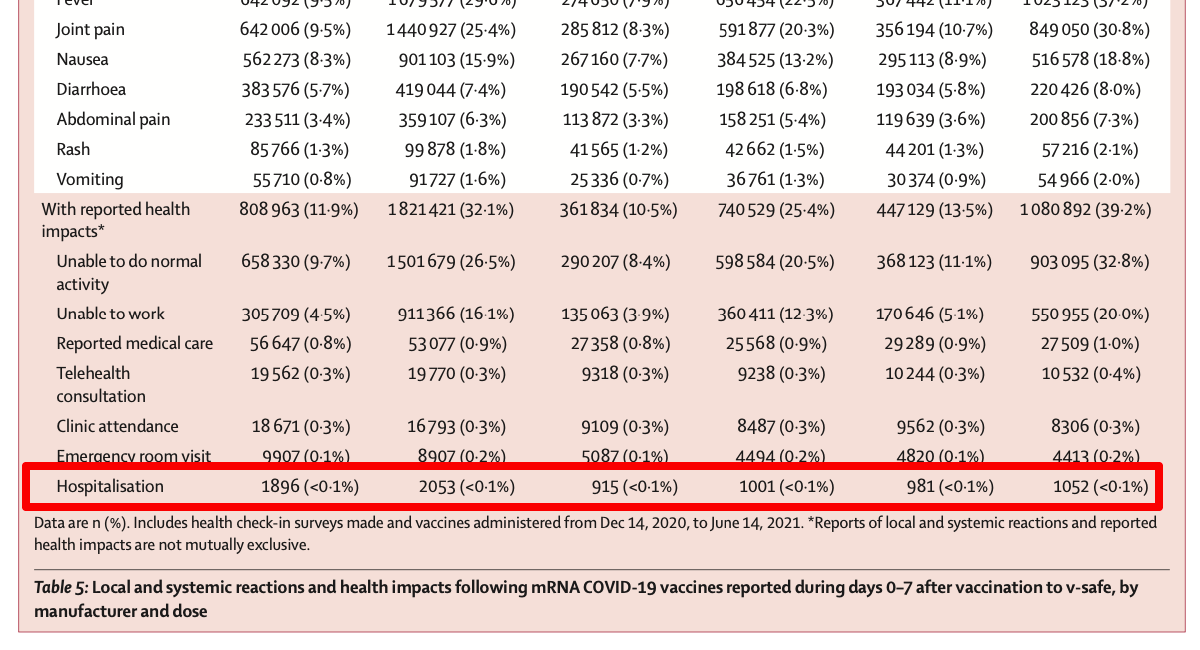

In my previous article I mentioned that the hospitalization rate of v-safe registrants was not likely to be as high as what the folks at ICAN are saying, nor as low as what the CDC is claiming.

Were fewer than 0.1% of v-safe registrants hospitalized?

A few weeks back I wrote that Debunk the Funk Dan Wilson had mentioned the following in his video about the ICAN lawsuit:

But why is it so difficult to just look at the data and do the calculation?

There are three reasons. The first is that anyone could sign up for v-safe, which makes the denominator much, much larger than the 10 million people who actually registered. The second is that the v-safe data is quite hard to visualize, because each registrant has their own timeline[1]. The third is that the sheer size of the dataset (the consolidated checkin CSV file alone has nearly 150 million rows) makes it quite hard to run aggregate queries.
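On the third point, one common way to aggregate over a file that is too large to load at once is to stream it row by row and keep only running counts. The sketch below is a minimal illustration of that idea, not the method used for the numbers in this article. The column names (`registrant_id`, `days_since_dose`, `health_impact`) are hypothetical stand-ins, not the actual v-safe headers, and the three-row sample replaces the real file.

```python
import csv
import io
from collections import Counter


def count_rows_by_value(csv_file, column):
    """Stream a CSV once, counting rows per distinct value of `column`.

    Only one row is held in memory at a time, so memory use grows with
    the number of distinct values, not with the number of rows.
    """
    counts = Counter()
    for row in csv.DictReader(csv_file):
        counts[row[column]] += 1
    return counts


# Tiny in-memory stand-in for the real check-in file.
# The column names are hypothetical, not the actual v-safe headers.
sample = io.StringIO(
    "registrant_id,days_since_dose,health_impact\n"
    "A1,0,None\n"
    "A1,1,None\n"
    "B2,0,Hospitalization\n"
)
impact_counts = count_rows_by_value(sample, "health_impact")
print(impact_counts)
```

For a file on disk, the same function can be given a handle opened with `open(path, newline="")` in place of the in-memory sample.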

I suggest three approaches to solve these problems.

1 Use “vaccine enthusiast” datasets

I have discussed this in a previous article. Vaccine enthusiasts are people whose first checkin says they have had no problem at all after taking the vaccine. In other words, they did not choose to sign up after the fact only because they had an adverse event.

You can further refine this by creating smaller subsets made up of people who took only Pfizer, or only Moderna. This means we omit people who mixed the two brands (which would otherwise become another confounder).

There are over a million people in each of these cohorts, so we will still be doing our analysis over fairly large datasets.
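To make the cohort definition concrete, here is a minimal sketch of the two filters described above, under stated assumptions. The field names (`registrant_id`, `dose_number`, `days_since_dose`, `manufacturer`, `reported_problem`) are hypothetical, and "first checkin" is taken to mean the earliest checkin ordered by dose and then by day. The real files may need a different ordering rule.

```python
def order_key(checkin):
    """Sort key: earlier dose first, then earlier day after that dose."""
    return (checkin["dose_number"], checkin["days_since_dose"])


def first_checkins(checkins):
    """Map each registrant ID to that registrant's earliest checkin."""
    first = {}
    for c in checkins:
        rid = c["registrant_id"]
        if rid not in first or order_key(c) < order_key(first[rid]):
            first[rid] = c
    return first


def vaccine_enthusiasts(checkins):
    """IDs whose earliest checkin reported no problem at all."""
    return {
        rid
        for rid, c in first_checkins(checkins).items()
        if not c["reported_problem"]
    }


def single_brand(checkins, brand):
    """IDs whose checkins mention `brand` and no other manufacturer."""
    brands = {}
    for c in checkins:
        brands.setdefault(c["registrant_id"], set()).add(c["manufacturer"])
    return {rid for rid, seen in brands.items() if seen == {brand}}


def row(rid, dose, day, maker, problem):
    """Build one made-up checkin record (hypothetical field names)."""
    return {
        "registrant_id": rid,
        "dose_number": dose,
        "days_since_dose": day,
        "manufacturer": maker,
        "reported_problem": problem,
    }


checkins = [
    row("R1", 1, 0, "Pfizer", False),
    row("R1", 2, 0, "Pfizer", True),
    row("R2", 1, 0, "Pfizer", False),
    row("R2", 2, 0, "Moderna", False),   # mixed brands, so excluded
    row("R3", 1, 3, "Pfizer", True),     # first checkin reports a problem
]

pfizer_enthusiasts = vaccine_enthusiasts(checkins) & single_brand(checkins, "Pfizer")
print(pfizer_enthusiasts)
```

Keeping the two filters as separate sets means the Moderna cohort is the same intersection with a different brand argument.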

2 Create a timeline visualization tool

I made the following claim in my previous article:

What the query tells us is that there were over 8700 registrants out of that 1.3 million who were fine on the day of vaccination, who mentioned “Hospitalization” in one of their v-safe checkins on a future date.

You can of course take my word for it, but wouldn’t it be much better if I gave you a list of registrant IDs for the 8700, and you could plug it into a tool and verify for yourself that

a) these people are vaccine enthusiasts[2]

b) they got only the Pfizer vaccine

c) and they mentioned Hospitalization as the outcome in one of their checkins?

I am in fact working on such a tool and I will be discussing it in more detail in the next article.
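Independently of that tool, the three checks can be sketched in a few lines for a single registrant. This is only an illustration of the logic, not the tool itself. The field names are hypothetical, and "mentioned Hospitalization" is approximated as a substring match on an assumed `health_impact` field in any checkin after the first.

```python
def verify_registrant(timeline, brand="Pfizer"):
    """Check conditions a), b) and c) for one registrant's checkins."""
    ordered = sorted(
        timeline, key=lambda c: (c["dose_number"], c["days_since_dose"])
    )
    if not ordered:
        # No checkins at all: none of the three conditions can hold.
        return {"enthusiast": False, "single_brand": False, "hospitalized_later": False}
    first = ordered[0]
    return {
        # a) fine on Day 0 of the first dose
        "enthusiast": (
            first["dose_number"] == 1
            and first["days_since_dose"] == 0
            and not first["reported_problem"]
        ),
        # b) every checkin names the same single brand
        "single_brand": {c["manufacturer"] for c in ordered} == {brand},
        # c) a later checkin mentions Hospitalization
        "hospitalized_later": any(
            "Hospitalization" in c["health_impact"] for c in ordered[1:]
        ),
    }


# One made-up registrant timeline (hypothetical field names and values).
example_timeline = [
    {"dose_number": 1, "days_since_dose": 7, "manufacturer": "Pfizer",
     "reported_problem": True, "health_impact": "Hospitalization"},
    {"dose_number": 1, "days_since_dose": 0, "manufacturer": "Pfizer",
     "reported_problem": False, "health_impact": ""},
]
result = verify_registrant(example_timeline)
print(result)
```

Returning the three answers separately, rather than a single yes or no, makes it easier to see which condition a disputed registrant fails.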

Here is a preview of what the tool will look like:

3 Construct data subsets for further analysis

Once you have a way to view the timeline of a v-safe registrant, and smaller cohorts to work with (e.g. the “vaccine enthusiast” cohort), it becomes possible to construct data subsets to help us find specific patterns[3].

For example, of the 8700 people in the vaccine enthusiast Pfizer cohort (VEPC) who were hospitalized, how many had provided a free text entry?

And what were the most common MedDRA lower level terms?

How many of those were followed up by the v-safe call center employees?

What was the outcome of the calls?

I am working on creating these data subsets and will be publishing them in future articles.
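The first two questions above reduce to a filter and a frequency count. The sketch below shows one way to do that, assuming each record already links a registrant to their free text and to a list of coded terms. That layout and the field names (`free_text`, `llt_terms`) are hypothetical, and the records are made up for illustration.

```python
from collections import Counter


def summarize_cohort(records):
    """Return (registrants with free text, term -> registrant count).

    Each term is counted at most once per registrant, so a person who
    repeats the same term across several checkins is not counted twice.
    """
    with_text = {r["registrant_id"] for r in records if r["free_text"].strip()}
    seen = set()
    term_counts = Counter()
    for r in records:
        for term in r["llt_terms"]:
            pair = (r["registrant_id"], term)
            if pair not in seen:
                seen.add(pair)
                term_counts[term] += 1
    return len(with_text), term_counts


# Made-up records; field names and contents are illustrative only.
records = [
    {"registrant_id": "R1", "free_text": "chest pain, went to ER",
     "llt_terms": ["Chest pain"]},
    {"registrant_id": "R1", "free_text": "", "llt_terms": ["Chest pain"]},
    {"registrant_id": "R2", "free_text": "", "llt_terms": []},
    {"registrant_id": "R3", "free_text": "severe headache and chest pain",
     "llt_terms": ["Headache", "Chest pain"]},
]

n_with_text, term_counts = summarize_cohort(records)
print(n_with_text, term_counts.most_common(1))
```

Counting per registrant rather than per checkin is a design choice. Counting per checkin would instead weight people by how often they reported.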

What about free text analysis?

My three suggestions above do not involve any analysis of the free text entries.

But even after you do all this work, sometimes you do need to analyze the free text to get more insight into what happened[4].

However, the raw data (minus the free text analysis) is often sufficient to point out errors in CDC papers. And if the free text analysis changes the result in some important way, that is all the more reason to promptly release the information and ask the NLP community[5] to help with the task!

In other words, even if the free text analysis ends up giving MORE benefit of the doubt for the vaccine, it is important that

a) the CDC tells us that’s what happened

b) and they publish the data so that we can confirm the CDC is not manipulating it in any way

Someone might ask “Don’t you trust the CDC to do its job? Why do you think they are manipulating information?”

After my VAERS analysis, I don’t trust that the CDC is doing its job well.

And suppressing the v-safe data[6] and slow-walking its release is definitely not helping the CDC gain any trust.

[1] This matters because it makes the visualization a bit more challenging to design. You have to choose between a consistent interface with plenty of white space, or an information-dense interface which will not be consistent across all registrants and is thus a bit harder to follow. I chose the former approach for my visualization tool.

[2] You can verify this by looking at their checkin for Day 0 of the first dose.

[3] You can also construct these data subsets without having a timeline visualization tool, but I think it would be much harder for the programmer to debug errors, as well as for the audience to cross-verify the numbers.

[4] And there are now ways to use LLMs like GPT to help speed up the task.

[5] Why request external help? Can’t the CDC do it themselves? I am not sure if the lack of text analysis skills among CDC employees is intentional or accidental, but you can read this article about v-safe text mining to understand just how bad it is. Besides, there have also been some important improvements since the Lancet paper was written, such as the release of ChatGPT, which can help speed up this analysis.

[6] Remember, it took a FOIA request to get the CDC to release the v-safe data, and they still withheld the free text entries. Then it took yet another FOIA request to get the free text entries, but this time they have withheld the dates associated with the free text entry submissions!