Why all research papers based on VAERS are wrong about vaccine safety

They all ignore followup reports and often understate COVID19 vaccine dangers

Last week, I wrote this in my article:

It is my view that if you move from looking at only the deleted death reports, to also taking into account serious adverse events, and then also taking into account the full narrative text of both the original and the followup report, the sum total analysis you can potentially do will end up being drastically different.

Interestingly, I then stumbled into a paper about Guillain–Barre Syndrome (GBS) which turned into the perfect case study for this.

I used LitCovid to search for recent VAERS papers (these are papers which have passed peer review and have also been hand-curated by the LitCovid team as meeting an acceptable standard).

Discussion of the Chalela et al paper

I have to give credit where credit is due.

The paper is actually written quite well. I was able to read the full paper without much difficulty.

I noticed that they filtered for all the VAERS reports which were filed in 2021 and selected reports where the MedDRA symptoms (from the VAERSSYMPTOMS.csv file) came from the following list:

Based on these symptom names, they identified 815 reports. Using the same list, I identified 796. I am probably missing some terms (for example, I could not find anything matching “Guillain-Barre syndrome variant” in the entire database; maybe they used a different name for it in their search).
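To make the filtering step concrete, here is a minimal sketch of how reports could be selected by symptom name. It assumes the rows of VAERSSYMPTOMS.csv have been loaded as dictionaries with the columns VAERS_ID and SYMPTOM1 to SYMPTOM5, and that one report can span several rows. The two terms in `GBS_TERMS` are illustrative placeholders, not the paper's actual list, and the function name is my own.

```python
# Illustrative terms only; the paper's full symptom list is NOT reproduced here.
GBS_TERMS = {"guillain-barre syndrome", "miller fisher syndrome"}

# Assumed layout: each row carries up to five coded symptom names.
SYMPTOM_COLUMNS = ["SYMPTOM1", "SYMPTOM2", "SYMPTOM3", "SYMPTOM4", "SYMPTOM5"]


def ids_with_gbs_symptom(symptom_rows, terms=GBS_TERMS):
    """Return the set of VAERS_IDs that have at least one symptom in `terms`."""
    matched = set()
    for row in symptom_rows:
        for col in SYMPTOM_COLUMNS:
            value = (row.get(col) or "").strip().lower()
            if value in terms:
                matched.add(row["VAERS_ID"])
                break  # one hit is enough for this row
    return matched


# Made-up rows for illustration; report "2" spans two rows.
rows = [
    {"VAERS_ID": "1", "SYMPTOM1": "Guillain-Barre syndrome", "SYMPTOM2": "Pain"},
    {"VAERS_ID": "2", "SYMPTOM1": "Headache"},
    {"VAERS_ID": "2", "SYMPTOM1": "Miller Fisher syndrome"},
    {"VAERS_ID": "3", "SYMPTOM1": "Fatigue"},
]
gbs_ids = ids_with_gbs_symptom(rows)
```

Because the comparison is an exact match on the lowercased name, a term that is spelled differently in the database would be missed, which may explain part of the gap between 815 and 796.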

Then they actually read the narrative text (which other papers rarely do) and also assigned categorical values (0, 1 and U for unknown) to each report based on the following information:

Variables not prepopulated but obtained from the free-text description of the individual that filed the report included the following: need for ICU, need for mechanical ventilation, recent diarrhea, recent COVID-19 infection, recent upper respiratory infection, use of plasmapheresis (PLEX), infusion of intravenous immunoglobulin (IVIG), whether spinal magnetic resonance imaging (MRI) was performed, enhancement of spinal roots on MRI, whether a lumbar puncture (LP) was performed, elevated protein levels in the cerebrospinal fluid, whether a nerve conduction study (NCS) was performed, abnormal NCS findings consistent with GBS/V, and neurologic consultation. Categorical variables were entered into a database as 0 for absent, 1 for present, and U for unknown.

And then they asked some independent experts to evaluate whether the report was actually GBS based on the information in VAERS, and also used some tiebreaking rules.

The VAERS query was reviewed by four neurologists who extracted the variables and added them to a database. Cases in which the reviewers were uncertain about the diagnosis were independently reviewed by three neurologists to arrive at a consensus about the diagnosis.

You can read the full paper to see the Results and the Conclusions. I did not find anything odd about the results or conclusions, but do keep in mind I don’t have a background in medicine or biology.

What happened to the followup GBS reports?

Based on the public facing data which is already available in VAERS, I do think the authors did the best job they could.

But I have already discussed how all followup reports are deleted from VAERS.

The important question to ask is:

“Does the paper understate COVID19 vaccine dangers?”

I think the answer is Yes, and I will explain why in the rest of this article.

Identification of duplicates

I first created a system to identify duplicates (i.e. match the original and the followup report).

I started with the deleted CSV file from the VAERS Analysis website and searched the narrative text for the following keywords: guillain, neuropathy and fisher. I kept only those reports which had all the necessary information, like age, state and vax_date (otherwise it is much harder to run the deduping algorithm).

    select * from deleted_feb_2023
    where (lower(symptom_text) like '%guillain%'
        or lower(symptom_text) like '%neuropathy%'
        or lower(symptom_text) like '%fisher%')
      and age_yrs is not null
      and vax_date is not null
      and state is not null
      and recvdate::date between '2021-01-01'::date and '2021-12-31'::date

This gave me a list of 287 deleted rows related to GBS. This might have omitted some reports, but the current list is sufficient for our analysis.

Then I matched each of these 287 rows with the existing VAERS DATA file for the year 2021 based on the following parameters:

AGE_YRS

SEX

STATE

VAX_DATE

If this produces more than 1 “candidate”, I will check to see if

a) the candidate has at least 1 symptom from the GBS list in Table 1

b) the candidate shares at least 1 symptom with the deleted report

If there are many such candidates, the one which shares the most symptoms with the deleted report is considered the best match.

Note: Most of the time, there is exactly 1 candidate which has many shared symptoms with the deleted report.
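The matching rules above can be sketched roughly as follows. This is a simplified illustration, not my actual script: it assumes each report is a dictionary holding the four key fields plus a set of symptom names under a `"SYMPTOMS"` key, and the function name `best_match` is made up for this example.

```python
MATCH_KEYS = ("AGE_YRS", "SEX", "STATE", "VAX_DATE")


def best_match(deleted, retained_reports, gbs_terms):
    """Pick the retained report most likely to be the original of `deleted`.

    Returns None when no retained report qualifies.
    """
    key = tuple(deleted[k] for k in MATCH_KEYS)
    candidates = [r for r in retained_reports
                  if tuple(r[k] for k in MATCH_KEYS) == key]
    if len(candidates) == 1:
        return candidates[0]
    # More than one candidate: apply conditions (a) and (b)
    qualified = [
        r for r in candidates
        if r["SYMPTOMS"] & gbs_terms                # (a) has a GBS symptom
        and r["SYMPTOMS"] & deleted["SYMPTOMS"]     # (b) shares a symptom
    ]
    if not qualified:
        return None
    # Rank by number of shared symptoms; on a tie the first candidate wins
    return max(qualified, key=lambda r: len(r["SYMPTOMS"] & deleted["SYMPTOMS"]))


# Made-up reports for illustration
gbs_terms = {"Guillain-Barre syndrome"}
base = {"AGE_YRS": 54.0, "SEX": "F", "STATE": "TX", "VAX_DATE": "2021-03-01"}
deleted = dict(base, VAERS_ID="D1",
               SYMPTOMS={"Guillain-Barre syndrome", "Paraesthesia", "Muscular weakness"})
retained = [
    dict(base, VAERS_ID="R1", SYMPTOMS={"Headache"}),
    dict(base, VAERS_ID="R2", SYMPTOMS={"Guillain-Barre syndrome", "Paraesthesia"}),
    dict(base, VAERS_ID="R3", STATE="CA", SYMPTOMS={"Guillain-Barre syndrome"}),
]
match = best_match(deleted, retained, gbs_terms)
```

Here R3 is excluded because the state differs, R1 fails both symptom checks, and R2 is returned. Note that an exact match on all four fields means a report with a missing VAX_DATE can never be matched this way.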

Next, I run the same comparison algorithm I have used for my previous analyses of deleted reports to compare the deleted report and the retained report.

In another article I had written before, I pointed out that a lot of the deleted reports became “less complete, less serious or less conclusive”.

Here I will categorize my finding along these three axes, and you will see quite easily just how much important information is lost when the followup reports are ignored.

Note: of the total of 287 reports, there were some duplicates (with the same VAERS_ID) in the original Excel file. After I removed them, there were 238 unique VAERS_IDs.

So for the rest of this analysis I will use 238 as the denominator.

Less complete

Matching parameters

Of the 238 deleted records which had all the information about age, sex, state and vax_date, I could not find any matches for 75 of them, and was able to successfully find the match for 163 reports.

In my previous article, I pointed out that a group of VAERS reports become “less complete”. Now since I did not understand the “keep the first report” rule at first, I will now change this wording to say “followup reports become MORE complete” :-)

But the idea is still the same.

Since we are working with such a small set of reports, I was actually able to get an example of this to explain what I meant by “less complete”.

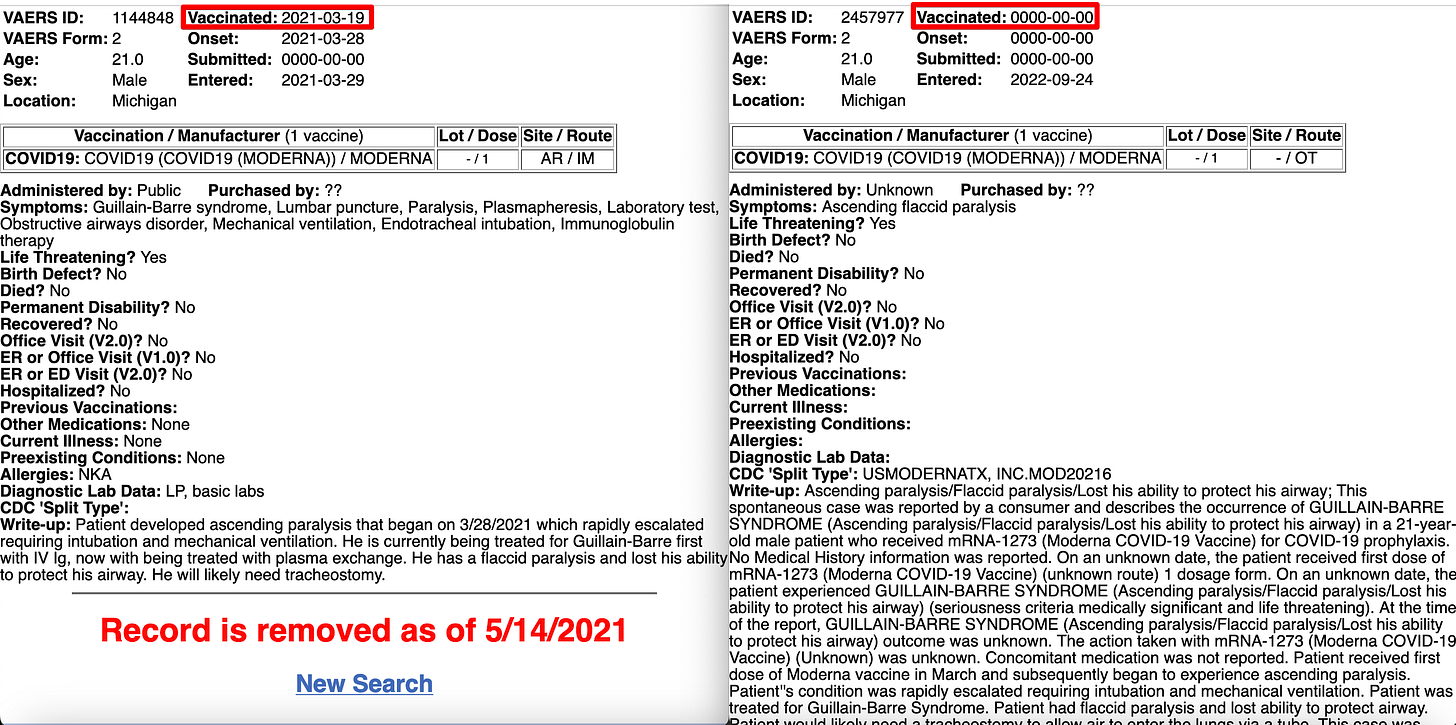

Let us compare VAERS reports 1144848 and 2457977

The one on the left is the deleted (follow up) report while the one on the right is the retained (original) report. As you can see, the deleted (followup) report has the vaccination date, while the retained (original) report does not! In turn, this means while it would have been possible to calculate days to symptom onset using the followup report, this was not possible using the original report.

In other words, the original report is “less complete” than the deleted report.

The problem is that this incomplete information also makes it much harder to be certain about duplicates. I can still look at these two reports manually and see they are referring to the same patient, but so far I have not found a foolproof automated method which does not produce a few false positive matches.

So even though I am not able to identify the original report for which the duplicate report was filed, I can say based on the search algorithm that the deleted followup report was “more complete” than the retained original report.

Categorical variables

There is another type of information which can be called “less complete”

As I wrote earlier, the authors mention in the paper that they read the narrative text to mark categorical variables. I looked at the narrative text for matching reports (these are cases where I have been able to identify duplicates) to see if the keywords that the authors were looking for are present in the followup report but NOT present in the original report.

This is the list of keywords I came up with.

    keyword_list_to_compare = [('ivig', 'intravenous immunoglobulin'),
                               (' icu', 'intensive care unit', 'intensive care'),
                               'mechanical ventilation', 'diarrhea',
                               ('plex', 'plasmapheresis'),
                               ('spinal magnetic resonance imaging', 'spinal mri'), 'spinal roots', 'lumbar puncture',
                               'elevated protein', ('nerve conduction study', 'ncs'), 'neurologic consultation']

Note that I had to group keywords together sometimes because they are synonymous.
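As a rough sketch of the comparison itself: for each keyword group, check whether any synonym appears in the followup narrative but none appears in the original. The helper names below are made up for illustration, and the list is shortened. Since the list mixes tuples and bare strings, the sketch first turns every entry into a tuple so that a bare string is not iterated character by character.

```python
def normalise(entry):
    """Turn a bare keyword string into a one-element tuple."""
    return (entry,) if isinstance(entry, str) else tuple(entry)


def has_any(text, group):
    """True if any synonym in `group` occurs in `text` (case-insensitive)."""
    lowered = (text or "").lower()
    return any(keyword in lowered for keyword in group)


def keywords_only_in_followup(original_text, followup_text, keyword_list):
    """Label each group found in the followup but absent from the original."""
    found = []
    for entry in keyword_list:
        group = normalise(entry)
        if has_any(followup_text, group) and not has_any(original_text, group):
            found.append(group[0].strip())  # first synonym serves as the label
    return found


# Shortened keyword list and made-up narratives for illustration
keywords = [("ivig", "intravenous immunoglobulin"),
            (" icu", "intensive care unit", "intensive care"),
            "mechanical ventilation", "diarrhea"]
original = "Patient developed weakness and was admitted."
followup = "Patient developed weakness, was admitted to the ICU and received IVIG for 5 days."
added = keywords_only_in_followup(original, followup, keywords)
```

Plain substring matching is crude: a short keyword such as 'plex' would also match inside 'complex', so the hits still need a manual look.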

You can go to the list of matches and filter for the following:

Set FLAG = ‘⚠️’ and FIELDNAME starts with ‘HASKEYWORD_’

Here is the list of results

Let us consider the first row: 1086367 and 1116613

Once again, the deleted report is on the left and the retained report is on the right.

As you can see, not only is the deleted followup report a lot more descriptive, there is no mention of IVIG at all (one of the things the authors were looking for) in the retained original report. So that means this particular report was probably misclassified by the 4 people who reviewed this case.

If you go through the filtered list as I explained before, you can see 21 such cases (note: not all are unique reports, there could be some repetitions).

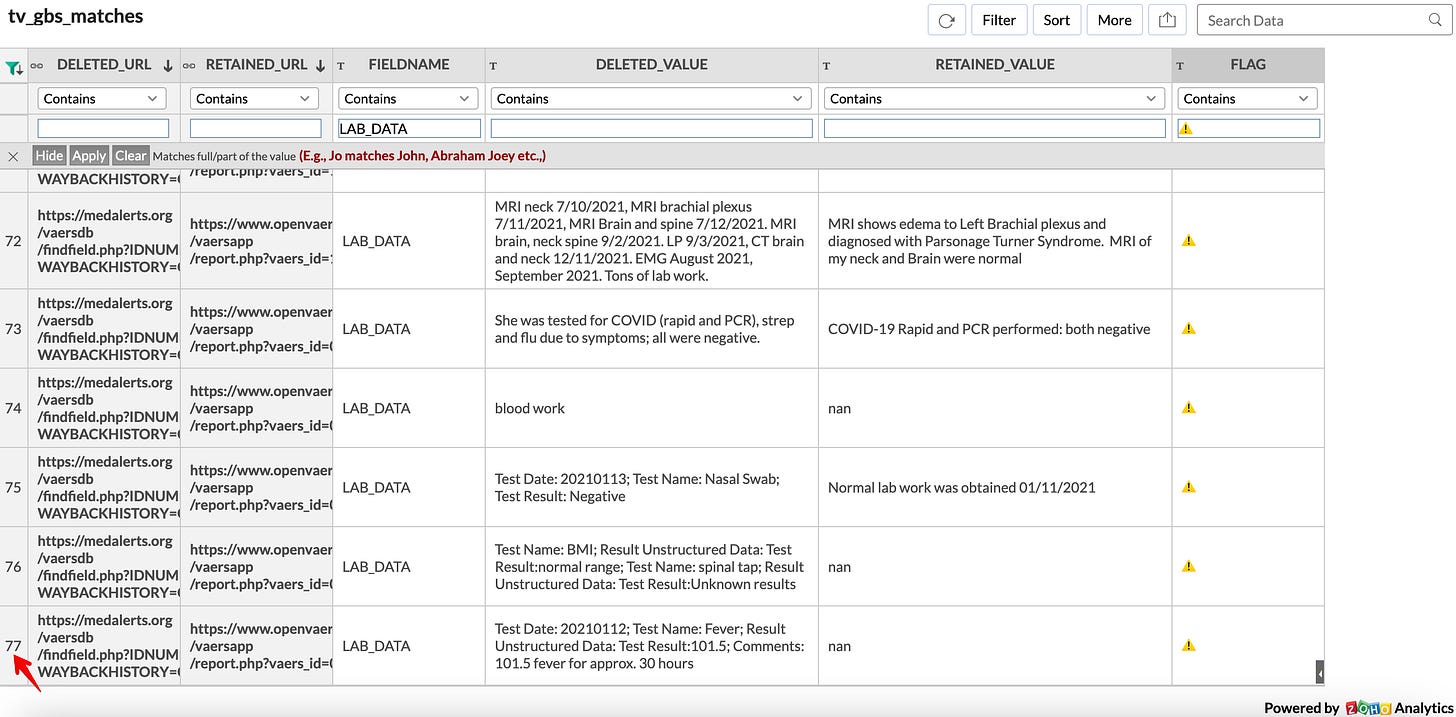

LAB_DATA

I have also added a comparison between LAB_DATA for the original and the followup report.

If I filter based on just the length of the original vs followup report, there are 77 reports.

Now the diagnostic lab data is interesting in that you cannot be sure more text automatically means the followup report is more complete or more useful. I have written about extracting information from the lab data previously, so perhaps I might extend this analysis in the future if/when I understand what diagnostic test is more vs less important :-)

But you can see that the RETAINED_VALUE is just nan in many cases (which means no diagnostic data). So let us filter for those.

In at least 26 reports, there is some diagnostic lab data in the followup report which wasn’t in the original report. Would this have changed the opinion of the expert reviewers?
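The filter for "lab data present only in the followup" can be sketched like this. RETAINED_VALUE is the column name from my comparison table; DELETED_VALUE is an assumed name for the matching column on the deleted side, and the helper names are made up for this example.

```python
import math


def is_blank(value):
    """True for None, NaN, or an empty / whitespace-only string."""
    if value is None:
        return True
    if isinstance(value, float) and math.isnan(value):
        return True
    return str(value).strip() == ""


def lab_data_added(pairs):
    """Keep pairs where only the deleted (followup) report has lab data."""
    return [p for p in pairs
            if is_blank(p["RETAINED_VALUE"]) and not is_blank(p["DELETED_VALUE"])]


# Made-up comparison rows for illustration
pairs = [
    {"RETAINED_VALUE": float("nan"), "DELETED_VALUE": "Lumbar puncture performed"},
    {"RETAINED_VALUE": "MRI normal", "DELETED_VALUE": "MRI normal; NCS abnormal"},
    {"RETAINED_VALUE": float("nan"), "DELETED_VALUE": ""},
]
added_lab = lab_data_added(pairs)
```

Only the first row qualifies: the second already had lab data in the retained report, and the third has none on either side.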

Less conclusive

SYMPTOM_TEXT

Now let us discuss reports which become less conclusive.

If the SYMPTOM_TEXT field is at least 1.5 times as long in the deleted report I flag it in the table. Generally speaking, this means the followup report is more informative than the original report (as you would expect).

You can see that this happens for 131 reports. Considering that I was able to match only 163 out of the 238 reports, this means around 80% of the matched followup reports were more descriptive than the original report.
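The length rule and the percentage above work out as follows. This is a minimal sketch; the function name is made up, and I assume an empty followup text should never be flagged.

```python
def is_more_descriptive(deleted_text, retained_text, ratio=1.5):
    """Flag when the deleted (followup) text is at least `ratio` times as long."""
    deleted_len = len(deleted_text or "")
    retained_len = len(retained_text or "")
    return deleted_len > 0 and deleted_len >= ratio * retained_len


# Share of matched reports that were flagged, using the counts from the text
flagged, matched = 131, 163
share = flagged / matched
print(f"{share:.1%}")  # prints 80.4%
```

Length is only a proxy for informativeness, so a flag is a prompt to read the two narratives side by side rather than proof on its own.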

And when there is more information available to read, this means it is easier to classify a report.

While I don’t have any way to know for sure if all of these 131 were actually GBS cases, it is clear that the 4 reviewers did not have the complete information for a fairly large number of the 815 reports that they reviewed.

ONSET_DATE

One more surprising thing I found was how often the date of symptom onset was a bit earlier in the followup reports. It happened in 44 reports.

(You can simply filter for FLAG=’⚠️’ and FIELDNAME=’ONSET_DATE’)
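For reference, the onset comparison boils down to a date subtraction. A minimal sketch, assuming both dates have already been parsed; the function name is made up for illustration.

```python
from datetime import date


def onset_shift_days(retained_onset, deleted_onset):
    """Days by which the followup onset precedes the original onset.

    Positive means the followup reports an EARLIER onset.
    Returns None if either date is missing.
    """
    if retained_onset is None or deleted_onset is None:
        return None
    return (retained_onset - deleted_onset).days


# Made-up dates: the followup moves the onset a week earlier
shift = onset_shift_days(date(2021, 4, 10), date(2021, 4, 3))
flag_it = shift is not None and shift >= 1
```

A shift of 7 here means the followup puts the onset a week closer to the vaccination date than the original report did.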

Let us consider the implications of this.

Clearly, the authors considered this an important metric (maybe THE important metric) in their calculations. This becomes clear from reading the abstract.

In at least 44 followup reports, the date of onset was reported to be at least a day earlier than what was provided in the original report.

And there were quite a few reports where the date of symptom onset differed by a week. How many of these fell outside the expected timeline? Would it have made a difference to the final result?

(Note: the authors did not make the VAERS_ID of the 815 reports public. So there is no way for me to compare. But I would expect that it would have brought at least a handful of cases within the “expected timeline” window)

Less serious

If you filter for one of the following:

FIELDNAME = 'HOSPITAL' OR 'ER_VISIT' OR 'L_THREAT' OR 'DISABLE'

you will find that another 37 reports became more serious in the followup report.

This is important information that the authors are probably not aware of if they saw only the original reports.
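The "more serious" check can be sketched as a comparison of the four seriousness flags. I am assuming here that a set flag is stored as 'Y' and an unset one is blank or missing; the function name is made up for this example.

```python
SERIOUS_FIELDS = ("HOSPITAL", "ER_VISIT", "L_THREAT", "DISABLE")


def escalated_fields(retained, deleted):
    """Fields set to 'Y' in the deleted (followup) report but not in the retained one."""
    return [field for field in SERIOUS_FIELDS
            if deleted.get(field) == "Y" and retained.get(field) != "Y"]


# Made-up pair: disability is recorded only in the followup
retained_report = {"HOSPITAL": "Y", "DISABLE": None}
deleted_report = {"HOSPITAL": "Y", "DISABLE": "Y"}
escalated = escalated_fields(retained_report, deleted_report)
```

A report counts as having become more serious when this list is non-empty.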

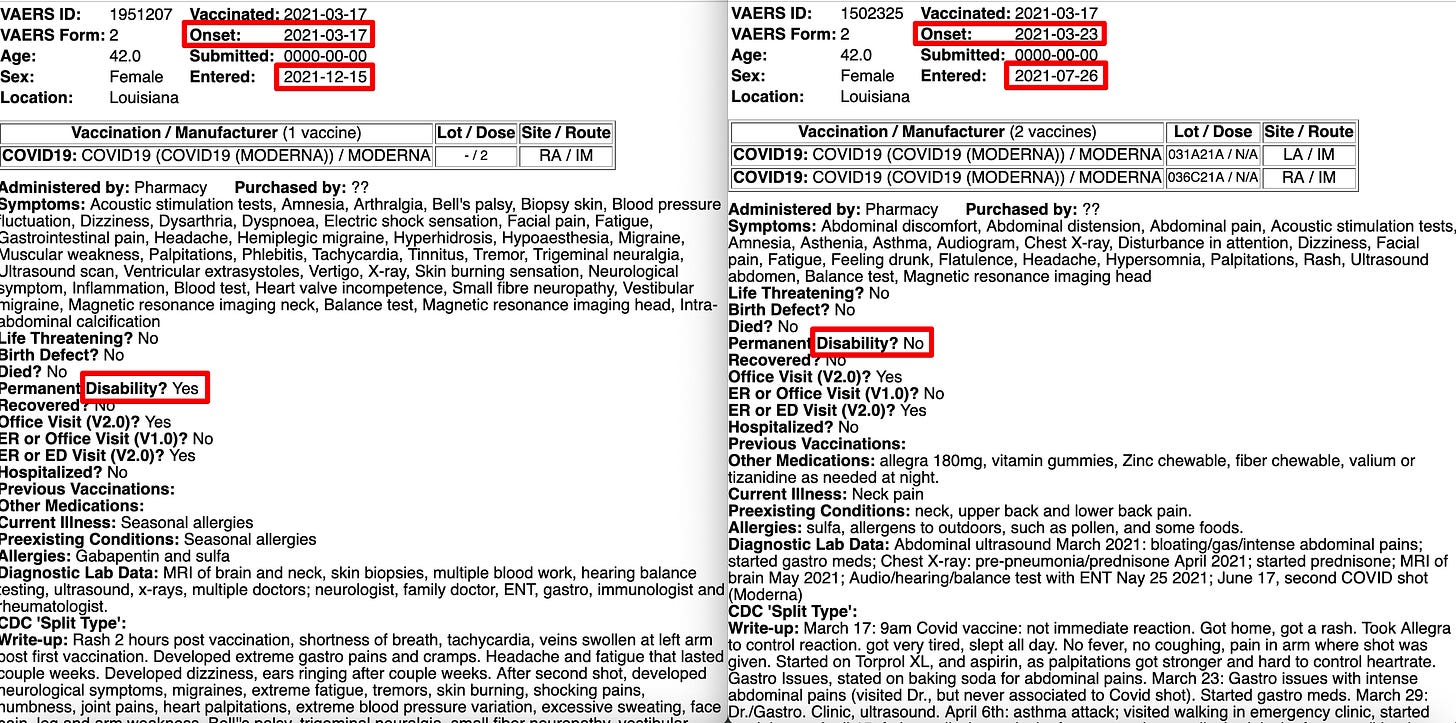

Here is a good example: 1951207 vs 1502325

Once again, the one on the left is the deleted report and the one on the right is the retained report.

The first thing you will notice is that even though the write-up clearly states in the original report that this person got a rash right after injection, the date of onset was coded as only 6 days later.

The second thing you notice is that the ‘Permanent Disability’ has been updated from ‘No’ to ‘Yes’

The third thing you will notice is that the total number of symptoms has increased, and in fact includes “Heart Valve Incompetence” and “Tachycardia”

Finally, the followup report was filed almost 5 months after the original report, giving the patient plenty of time to recover. But from the report, it looks like her condition worsened.

The question is: without the full, updated report, how much could the experts have inferred from just the original report?

Does this change the overall results in the paper?

As I mentioned before, unless the authors release the list of 815 reports, it is not possible to say if this changes the overall results or the conclusions of the paper.

To their credit, the authors do not write an unrealistic conclusion which does not match the analysis. But it is very clear that if the authors also had access to the followup reports, they would have been able to do a far more thorough analysis.

As I mention in the title, in my view all external research papers (that is, those not written by the CDC[1]) based on VAERS are wrong about vaccine safety.

This is all the more true if they actually use the narrative text in their analysis, because the followup reports are often much more descriptive and often provide more pertinent information than the original.

[1] This is not to imply the papers written by the CDC are right, just that there isn’t any way to be certain, as it is not a level playing field.

This is good info.

Might I suggest a TLDR or in academic terms an abstract / Findings section at the top of your posts? Most people won't read extensive processes of data analysis for various reasons (time, lack of expertise, too much info) but would benefit from knowing conclusions.

thanks.

Thank you, Aravind, for another excellent post.

Follow-up reports aren't always more complete than the original reports. For instance, in your last example, the original report includes several symptoms that are not in the follow-up report, and at least some of these symptoms appear in the write-up in both reports. There is also information in the original report on other medications, current illness, pre-existing conditions, and allergies that doesn't appear in the follow-up report.

One way to summarize this might be to say that follow-up reports generally have more information than original reports but sometimes may lack information included in the original reports, too.

I wonder how much of these differences is due to errors the reporter makes and how much might be due to VAERS staff "editing" reports. Clearly, the VAERS staff make coding errors in not capturing symptoms that appear in the write-up. Another question is why VAERS staff have to delete follow-up or duplicate reports for a given case if they are applying the supposed rule of including only the first report for a case. Altogether, these kinds of errors suggest incompetent administration/execution of the VAERS program. Because these errors don't show a _consistent_ bias toward lessening the apparent negative impact of vaccines, incompetence seems to be more relevant than malicious intent.