What do the VAERS autism reports ACTUALLY show?

Quantifying the impact of litigation related reports

Summary:

My analysis challenges a 2006 paper about VAERS autism reports by using LLMs to “read” the clinical narratives, demonstrating that the percentage of litigation-related reports actually decreased after 2003 (study completion date), contrary to the paper's predictions about increasing trends.

Examining vaccination dates instead of report dates shows that many reports from the study period were "catch-up" filings from earlier years, probably triggered by class action lawsuits after parents were initially discouraged from reporting vaccine-autism connections.

Testing revealed the original paper's keyword-based method missed ~45% of litigation-related reports that AI models could identify, demonstrating significant limitations in the paper's methodology.

Using multiple AI language models enables deeper analysis of VAERS report narratives than traditional keyword searches, suggesting a promising new approach for vaccine safety research.

Alternative approaches could have achieved similar insights without advanced AI - including manual review teams, MTurk analysis, or Kaggle competitions - demonstrating how public health agencies could have maintained higher research standards

President elect Trump recently posted this on Truth Social

I haven’t yet seen anyone from the Science Based Medicine website suggest the public health agencies already represent Gold Standard Scientific Research1 but I want to present some data analysis which shows just how far these agencies have fallen.

David Gorski wrote this in late 2021 even though by then there was an explosion of VAERS reports related to the COVID19 vaccines.

I then looked at the data and did some preliminary analysis which demonstrated that this did not matter anyway for the post-COVID19 era because litigation related reports were only a very small fraction of all VAERS reports by 2019.

Is litigation a source of over-reporting for COVID19 vaccines?

This is Part 2 of my Case for Vaccine Data Science series.

When I wrote that paper, I did not have a copy of the original paper because it was behind a paywall.

As you can imagine, if someone cites a supposedly important paper which is behind a paywall, it becomes a pretty effective way to slow down independent research.

I eventually got a copy of the paper and I will now present some analysis to show why David Gorski’s conclusions are wrong even if you only consider what the paper published.

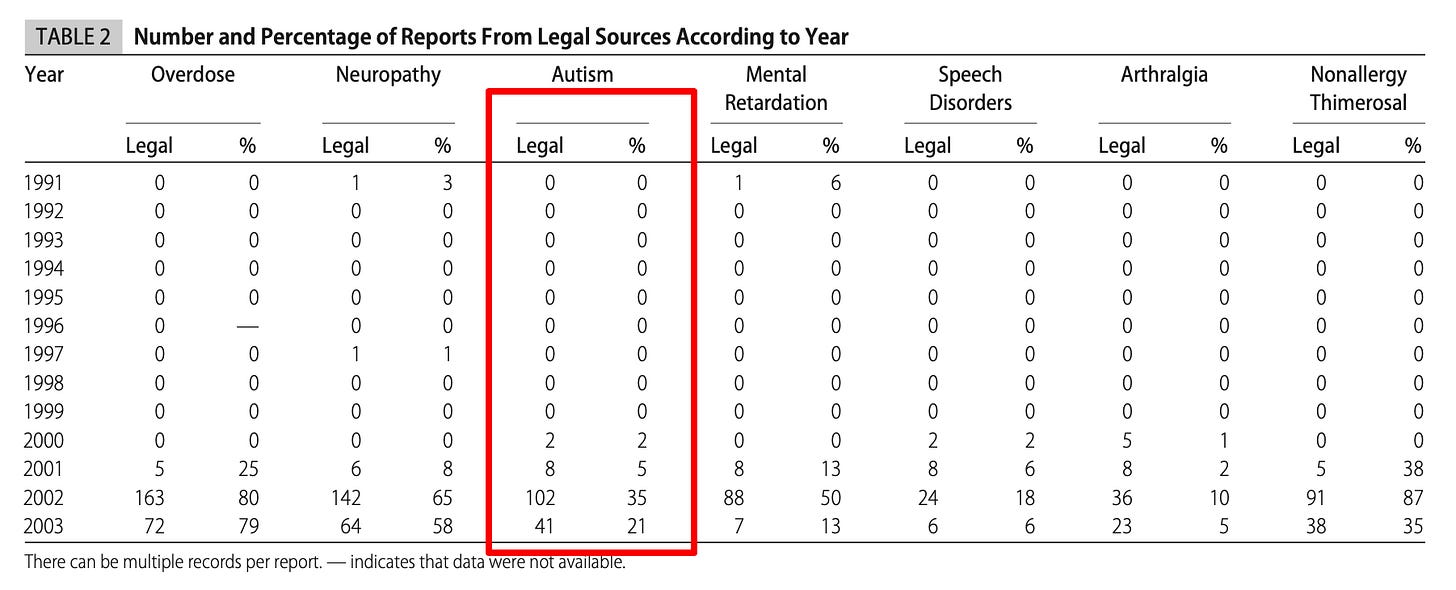

Here is the main idea as it relates to autism - the authors looked at VAERS reports from 1990 to 2003 where one of the symptoms was “Autism”, and then looked for keywords in the write up to try and figure out if they were related to litigation.

Then they plotted these numbers by year, and showed that there was a dramatic increase (as a percentage of total reports) in litigation related reports for a few years leading up to 2003, the study end date.

Based on this the paper came to the following conclusion

For the conditions reviewed here, it is apparent that a large enough percentage of reports are being made related to litigation that failure to exclude these will seriously skew trends. This is important for vaccines that contain thimerosal, and specifically for the MMR vaccine because of the controversy surrounding its relationship to autism. It therefore is incumbent on the authors who use VAERS data to provide detailed methods sections that describe their inclusion and exclusion criteria. To that end, we are making our SAS code available to interested parties. It is not sufficient simply to reference extraction of the VAERS data set.

However, the authors are wrong about the trend they thought they discovered.

Extracting the litigation status and earliest year of symptom onset

It is possible to write some Python code to ask an LLM like ChatGPT to “read” a VAERS writeup and figure out if the report is related to litigation. In the same way, you can also ask it to read the writeup and guess the earliest date of symptom onset.

I used four different LLMs to do this task - OpenAI’s GPT4, Google’s Gemini Pro, Anthropic’s Claude Sonnet and Twitter’s xAI grok2.

This is what the code would look like for GPT4

import pandas as pd

import time

import json

import os

from openai import OpenAI

from pydantic import BaseModel

from dotenv import load_dotenv

load_dotenv()

api_key = os.getenv('GPT_API_KEY')

client = OpenAI(api_key=api_key)

class Explanation(BaseModel):

matching_phrase_if_any: str

matching_sentence_if_any: str

explanation: str

class PatientInfo(BaseModel):

year_of_earliest_symptom_onset: int

year_of_earliest_symptom_onset_explanation: Explanation

related_to_litigation: bool

related_to_litigation_explanation: Explanation

df: pd.DataFrame = pd.read_csv(f'csv/autism.csv')

file_name = 'gpt_autism_litigation_map.json'

with open(f'json/gpt/{file_name}', 'r') as f:

gpt_autism_litigation_map = json.load(f)

num_rows = len(df)

for index, row in df.head(num_rows).iterrows():

try:

start_time = time.time()

vaers_id = str(row['VAERS_ID'])

if vaers_id in gpt_autism_litigation_map.keys():

print(f'Skipped {vaers_id}')

continue

symptom_text = row['SYMPTOM_TEXT']

print(f'Index = {index} Processing {vaers_id} Length = {len(symptom_text)}')

completion = client.beta.chat.completions.parse(

model="gpt-4o-2024-08-06",

messages=[

{"role": "system",

"content": "You are a biomedical expert. If the value is not available, use 'Unknown' for string and -1 for int. If there is no matching sentence, leave the field empty. If there is no matching phrase, leave the field empty. "},

{"role": "user",

"content": f'''

Writeup:

{symptom_text}

'''},

],

response_format=PatientInfo,

)

end_time = time.time()

duration = end_time - start_time

result = completion.choices[0].message.content

response = json.loads(result)

response['duration'] = duration

gpt_autism_litigation_map[vaers_id] = response

if index % 10 == 0:

with open(f'json/gpt/{file_name}', 'w+') as f:

json.dump(gpt_autism_litigation_map, f, indent=2)

except Exception as e:

print(e)

with open(f'json/gpt/{file_name}', 'w+') as f:

json.dump(gpt_autism_litigation_map, f, indent=2)

I wrote similar code for all the LLMs and then saved the results to respective files.

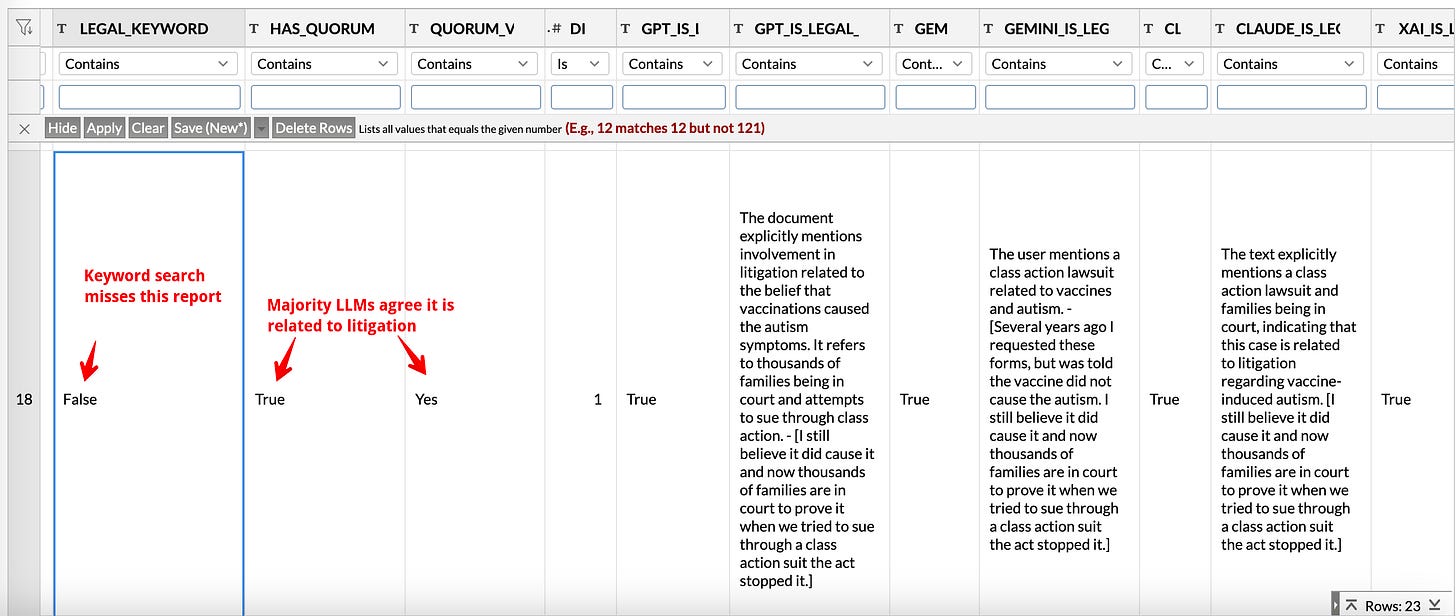

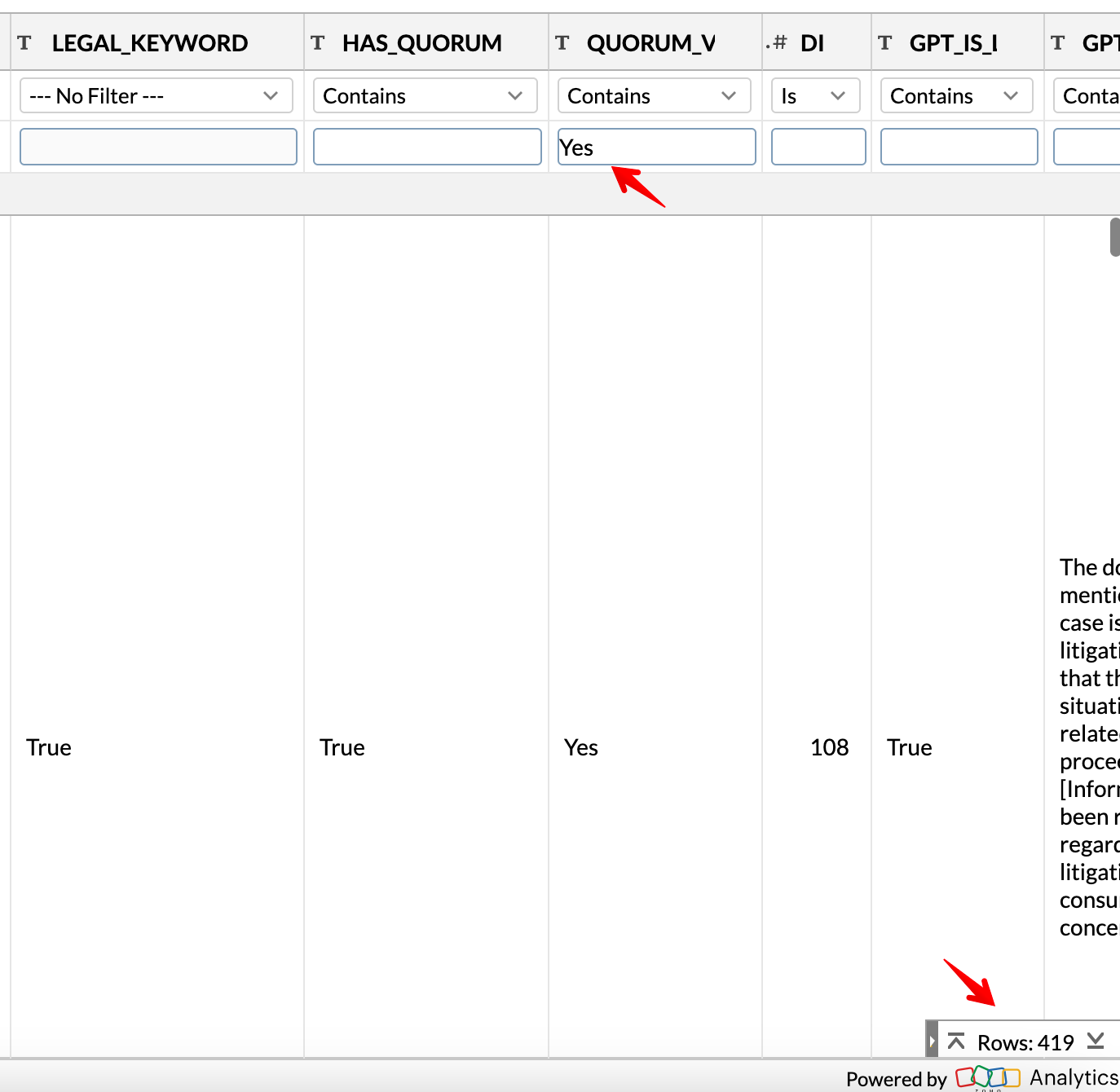

Then I took the saved results from these four files and compiled a new dataset which includes the following information (think of the quorum as a vote):

LEGAL_KEYWORD - if there is a keyword match (this is based on the original paper’s methodology) for litigation related keywords

HAS_QUORUM - if three or more LLMs agree3 on whether a report was related to litigation

QUORUM_VAL - this value is Yes if three or more LLMs agree on Yes, No if three or more LLMs agree on No, and Maybe if there is a split vote

MIN_YEAR - year of earliest symptom onset. I use a default value of 1900 and then calculate the earliest value based on the VAX_DATE from the report itself, as well as the write up (if vaccination date is not mentioned). If the value is still 1900 that means the VAX_DATE field is empty, and there isn’t any information in the writeup to infer the year of earliest symptom onset

In my opinion the authors make three mistakes.

Litigation report trends did not persist after 2003

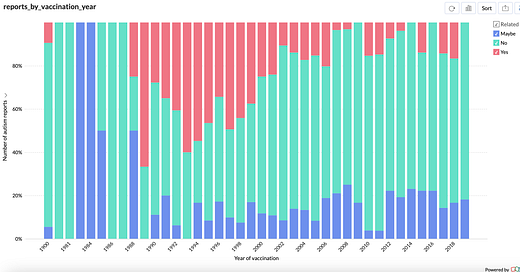

I plotted the trend of litigation related reports as a percentage of total number of reports by RECVDATE (the year report was first added to VAERS)

The red bars are reports related to litigation as inferred by the LLMs. The red line is an extrapolated trend line (that I added) for the percentage of autism reports you might expect to be litigation related. As you can see, the actual percentages (red bars) were quite high until 2007, but started getting smaller and smaller over time.

In other words, the trend did not continue for much longer after the paper was published, and it was certainly not true by 2021 when David Gorski wrote those tweets.

Litigation report trends look very different when plotted by vaccination year

Next I plotted litigation related reports versus the year of vaccination. Remember that this information is often missing for litigation related reports, so I had to use the LLMs to extract this information.

You can immediately notice that this trend looks very different and probably the reverse of what the authors claimed.

When plotted over the year of vaccination, the percentage of litigation related reports (red bars) seem to uniformly decrease over the same time period.

In other words, when you place it into the proper context - a lot of autism reports for the years 2002 till around 2008 were catch up reports, which were probably people who were being asked to file VAERS reports to complete some kind of a process.

Do litigation reports represent “over reporting”?

This leads us to the next question - what caused the glut of reports between 2002 - 2008? Could there be something else going on here?

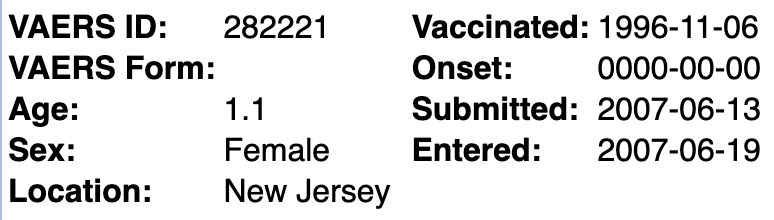

I think the answer is in one of the reports which

a) the Goodman paper did NOT recognize as being related to litigation. In other words, they did not use the necessary keywords and would have missed this report which seems to be part of a class action lawsuit.

b) the LLMs DID recognize as being related to litigation

c) specifically mentions that some parents filed VAERS reports after a long delay because they were initially told that the vaccines are not related to autism

Writeup:

Autism. Several years ago I requested these forms, but was told the vaccine did not cause the autism. I still believe it did cause it and now thousands of families are in court to prove it when we tried to sue through a class action suit the act stopped it. Now I just want to make sure my child is registered with VAERS and included in any compensation to help her get through life. Patient is a 4year 10month old female who has been diagnosed with Pervasive Developmental Disorder. At present her mother has requested that patient receive no more immunizations due to the literature suggesting linkage between immunizations and PDD/autism. Attached you will find patient immunization record to present and her rubeola titer which shows that she is immune. Patient's history suggests that she was born normally and began developmental changes after reactions she had following immunizations. These unexplained reactions included high fevers, and excessive diarrhea. After her immunizations given at one year, patient stopped using whatever words had been learned to that point, "skated" between furniture, became a fussy eater, irritable and "zoned out". Her developmental delays became more noticeable. She finally began walking at age 20 months. Patient is allergic to gluten. At present she is on a gluten free diet (no wheat) and a dairy free diet and is doing very well. I have been unable to ascertain if any vaccines are gluten free. I am trying to check through the manufacturing companies, as the pharmacist is unable to tell all the ingredients. Due to patient's neurologically impaired and developmental delayed condition, and her allergy to gluten, I would advise at this time that she receive no more vaccines and stay on her gluten free and dairy free diet. 6/20/2007 VAERS report received with records attached. Records acknowledge child with DX of Pervasive Developmental Disorder/ Austistic Spectrum Disorder. PMH: Gluten allergy. Infant had unexplained high fevers and diarrhea following vax. Child stopped speaking words after 1 year vax, and became a fussy eater, irritable, and appeared to zone out. Neuro eval describes hand flapping and rocking in place behaviors. PE revealed a wide-based gait and mild global hypotonia. Child demonstrates delays is both receptive and expressive language skills. Follow-up: Autism. 1/11/2010 Permanently "Autistic".

Notice that the vaccination was in 1996, but the report was only filed in 2007

Could this be the reason for the backlog?

How many litigation reports did the Goodman paper miss?

This leads us to another question.

The Goodman paper used simple keyword search to find litigation related reports. How many did it miss?

There are a total of 419 litigation related reports as identified by the LLMs (QUORUM_VAL = Yes)

Of these the keyword approach used by the Goodman paper missed 194 (over 45%)

There are 220 “maybes” according to the LLMs

As you might expect, the keyword approach missed all of them.

As a sanity check, I checked to see if there were any reports the Quorum decided on “No” but the keyword search marked as True. As expected, there weren’t any such reports.

What Gold Standard Medical Research would look like

Someone might read this and say “Well, you were only able to do this because you had access to LLM APIs in 2024”

But there are a LOT of partial solutions that the regulatory agencies could have implemented if they really wanted to get to the bottom of this topic:

a) There are only 1800 autism reports for the period 1990 - 2019. They could have assembled a team of 10 people to read 10 reports a day and done the entire analysis in under a month

b) VAERS data lies in the public domain. They could have used a service like MTurk to process the reports and ask for the real vaccination date and whether it was related to litigation. It would have probably cost them less than a few hundred dollars.

c) The CDC could have published the Autism reports alone as a free Kaggle competition and asked the Machine Learning (ML) community to come up with ways to extract relevant information. This would have also increased vaccine injury awareness4 among the ML community.

Conclusion

There is a lot of information you can extract from VAERS writeups by using some Python code and paying for access to the Large Language Model APIs.

This does incur some cost, but I think the RoI on this investment would be very high if someone takes up this project in a systematic way.

I think even they realize their readers are not THAT stupid :-)

The original plan was to only use three LLMs, but Twitter is providing $25 free credit per month to promote their AI till end of this year, so I added it. The results have been mixed, but it is quite decent.

Note: This includes when they all agree that the report is NOT related to litigation

I have mentioned this so many times on this website, but I do think this lack of awareness made the situation a whole lot worse. “My main takeaway from writing this series of articles is that people who are doing VAERS analysis should be using more data science principles, and there definitely has to be a lot more work done on text analysis of VAERS writeups.“

Thank you for this analysis and all your hard work.