"The causal relationship between BNT162B2 and the events cannot be excluded"

Can we infer causation from narrative text?

This can be considered the second part of the article I published yesterday.

In the previous article, I mentioned that there are many reports where the narrative text contains three distinct pieces of information which, when combined, indicate that the vaccine could have caused the adverse reaction:

a) the patient did not test positive for COVID-19 after vaccination

b) the patient has never had COVID-19 symptoms

c) the reporter is contactable, making it less likely to be a prank report. A reader also added this in the comments:

Pranksters are taking great risks to prank or joke, in that it is considered a felony to file a false report, aka a "federal crime". Moreover, if you've ever filed a report you will quickly notice they want to know everything about the submitter, just short of your SS#: your address, tel#, relationship to patient, and at the very least they would also have an IP address for the submitter. 2) They have a very reasonable and comfortable "up to" 4-6 wks to authenticate and request additional info if needed, before finalizing and publishing the report.

While each of these data points makes a reasonable case on its own (I hope), there is another group of reports which makes a much more compelling case.

These are the reports where someone (usually a healthcare professional) has reviewed the adverse reaction and concluded that the vaccination date and the adverse reaction are close enough in time that there could be a causal relationship.

So in this case, I start with all the foreign reports and filter them down to the ones which have the words “temporal” and “excluded” in the SYMPTOM_TEXT (the narrative text) field.

Within that, I split each report into individual sentences and then select those where a single sentence contains both the words “temporal” and “excluded”. This eliminates reports where the two words happen to appear in the report but not in the same sentence.
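To see why the sentence-level check matters, here is a minimal, spaCy-free sketch of both filters. It is an illustration, not the code used for this analysis. The function names are made up, and a naive regular-expression splitter (break after `.`, `!` or `?` followed by whitespace) stands in for spaCy's sentence segmentation, so it will mis-split abbreviations such as "Dr.".

```python
import re
from typing import Optional

# Naive splitter: break after '.', '!' or '?' when followed by whitespace.
# A stand-in for spaCy's doc.sents so the sketch runs without a model;
# it will mis-split abbreviations such as "Dr.".
SENTENCE_END = re.compile(r'(?<=[.!?])\s+')


def report_mentions_both(text: str) -> bool:
    """Report-level filter: both words appear anywhere in the narrative."""
    lowered = text.lower()
    return 'temporal' in lowered and 'excluded' in lowered


def temporal_sentence(text: str) -> Optional[str]:
    """Sentence-level filter: first sentence containing both words, else None."""
    for sentence in SENTENCE_END.split(text.strip()):
        lowered = sentence.lower()
        if 'temporal' in lowered and 'excluded' in lowered:
            return sentence
    return None


same_sentence = ("Fever on day 2. Based on the temporal association, the causal "
                 "relationship between BNT162B2 and the events cannot be excluded.")
split_across = "A temporal artery biopsy was normal. Other causes were not excluded."

for text in (same_sentence, split_across):
    print(report_mentions_both(text), temporal_sentence(text))
```

The second (made-up) narrative passes the report-level filter but not the sentence-level one, which is exactly the kind of report the second step removes.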

```python
# doc   = spaCy-parsed SYMPTOM_TEXT of the current report
# index = row label of that report in the DataFrame df
for sent in doc.sents:
    stext = sent.text
    sltext = stext.lower()
    if 'excluded' in sltext and 'temporal' in sltext:
        df.at[index, 'TEMPORAL'] = stext
```

Next, I use the spaCy DependencyMatcher to try to extract the person who made the report. While this does not always identify the reporter, it has a very high hit rate.

The rest of the fields are very similar to those in my previous article.

The new fields added to this report

These are the two fields that are new or changed in this report:

TEMPORAL: the sentence which contains both the words ‘temporal’ and ‘excluded’.

REPORTED_BY: while this is the same column name as before, I have made a few important changes to how it is populated.

First, I check if the sentence contains the word ‘report’ and the phrase ‘regulatory authority’.

Then I check if the sentence contains the word ‘report’ and the word ‘contactable’.

If neither of these is true, I check for specific patterns using a match on the dependency parse (spaCy's DependencyMatcher).

```python
# stext  = sentence text
# sltext = lower cased version of sentence text
# added_reported_by = flag so that only the first matching sentence
#                     of a report is stored in REPORTED_BY
if 'report' in sltext:
    if 'regulatory authority' in sltext:
        if not added_reported_by:
            df.at[index, 'REPORTED_BY'] = stext
            added_reported_by = True
    elif 'contactable' in sltext:
        if not added_reported_by:
            df.at[index, 'REPORTED_BY'] = stext
            added_reported_by = True
    else:
        # Re-parse the sentence and try the three dependency patterns in turn
        sent_doc = nlp(stext)
        matches = matcher_spontaneous_report(sent_doc)
        if len(matches) > 0:
            if not added_reported_by:
                df.at[index, 'REPORTED_BY'] = stext
                added_reported_by = True
        else:
            matches = matcher_received_report(sent_doc)
            if len(matches) > 0:
                if not added_reported_by:
                    df.at[index, 'REPORTED_BY'] = stext
                    added_reported_by = True
            else:
                matches = matcher_report_received(sent_doc)
                if len(matches) > 0:
                    if not added_reported_by:
                        df.at[index, 'REPORTED_BY'] = stext
                        added_reported_by = True
```

(Yes, the above code is not very optimal; there are many ways to improve it.)
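As one example of such an improvement, the nested branches can be replaced by an ordered list of checks, stopping at the first sentence that passes any of them. This is a hypothetical sketch. The function names are mine, and `crude_report_from` is a plain substring test standing in for the three DependencyMatcher patterns so that the example runs without spaCy.

```python
from typing import Callable, Iterable, List, Optional

# A check receives the sentence and its lower-cased form.
Check = Callable[[str, str], bool]


def mentions_regulatory_authority(stext: str, sltext: str) -> bool:
    return 'regulatory authority' in sltext


def mentions_contactable(stext: str, sltext: str) -> bool:
    return 'contactable' in sltext


def crude_report_from(stext: str, sltext: str) -> bool:
    # Placeholder for the three DependencyMatcher patterns; a substring
    # test is used only so that this sketch runs without spaCy.
    return 'received from' in sltext or 'report from' in sltext


CHECKS: List[Check] = [mentions_regulatory_authority, mentions_contactable,
                       crude_report_from]


def pick_reporter_sentence(sentences: Iterable[str],
                           checks: List[Check] = CHECKS) -> Optional[str]:
    """Return the first sentence that contains 'report' and passes any check."""
    for stext in sentences:
        sltext = stext.lower()
        if 'report' in sltext and any(check(stext, sltext) for check in checks):
            return stext
    return None


sentences = ["The patient developed a rash.",
             "This is a spontaneous report from a contactable physician."]
print(pick_reporter_sentence(sentences))
```

Every branch in the original stores the same sentence, so a single `any(...)` over the checks gives the same result per sentence. Returning the first hit plays the role of the `added_reported_by` flag, assuming that flag is reset for each report.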

Specifically, I look for the following three patterns:

```python
from spacy.matcher import DependencyMatcher

# nlp is a loaded spaCy pipeline with a dependency parser,
# e.g. nlp = spacy.load("en_core_web_sm").
# "REL_OP": ">" means the LEFT_ID token is the immediate head of the RIGHT_ID token.

name_spontaneous_report = 'report(spontaneous)(from)'
pattern_spontaneous_report = [
    # anchor token: report
    {
        "RIGHT_ID": "report",
        "RIGHT_ATTRS": {"ORTH": "report"}
    },
    # report -> spontaneous
    {
        "LEFT_ID": "report",
        "REL_OP": ">",
        "RIGHT_ID": "spontaneous",
        "RIGHT_ATTRS": {"ORTH": "spontaneous"}
    },
    # report -> from
    {
        "LEFT_ID": "report",
        "REL_OP": ">",
        "RIGHT_ID": "from",
        "RIGHT_ATTRS": {"ORTH": "from"}
    }
]
matcher_spontaneous_report = DependencyMatcher(nlp.vocab)
matcher_spontaneous_report.add(name_spontaneous_report, [pattern_spontaneous_report])
```

```python
name_received_report = 'received(report)(from)'
pattern_received_report = [
    # anchor token: received
    {
        "RIGHT_ID": "received",
        "RIGHT_ATTRS": {"ORTH": "received"}
    },
    # received -> report
    {
        "LEFT_ID": "received",
        "REL_OP": ">",
        "RIGHT_ID": "report",
        "RIGHT_ATTRS": {"ORTH": "report"}
    },
    # received -> from
    {
        "LEFT_ID": "received",
        "REL_OP": ">",
        "RIGHT_ID": "from",
        "RIGHT_ATTRS": {"ORTH": "from"}
    }
]
matcher_received_report = DependencyMatcher(nlp.vocab)
matcher_received_report.add(name_received_report, [pattern_received_report])
```

```python
name_report_received = 'report(received(from))'
pattern_report_received = [
    # anchor token: report
    {
        "RIGHT_ID": "report",
        "RIGHT_ATTRS": {"ORTH": "report"}
    },
    # report -> received
    {
        "LEFT_ID": "report",
        "REL_OP": ">",
        "RIGHT_ID": "received",
        "RIGHT_ATTRS": {"ORTH": "received"}
    },
    # received -> from
    {
        "LEFT_ID": "received",
        "REL_OP": ">",
        "RIGHT_ID": "from",
        "RIGHT_ATTRS": {"ORTH": "from"}
    }
]
matcher_report_received = DependencyMatcher(nlp.vocab)
matcher_report_received.add(name_report_received, [pattern_report_received])
```

I got 28239 rows which match the sentence pattern (both ‘temporal’ and ‘excluded’ in the same sentence).

Within those, I was able to identify the reporter for 26217, which is nearly 93% of the reports.
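To make the `>` operator in these patterns concrete, the following sketch checks the same three shapes against hand-written dependency parses, with no spaCy involved. The parses (each token paired with the index of its head) are my own assumption of what a parser might plausibly produce for typical narrative sentences, not actual spaCy output. `match_edges` is a hypothetical helper that, like `ORTH`, only supports exact word matches.

```python
from typing import Dict, List, Tuple

# A hand-written parse: (token text, index of its head token).
# The root token points to itself.
Token = Tuple[str, int]
Edge = Tuple[str, str]  # (head word, child word), i.e. head > child


def match_edges(tokens: List[Token], edges: List[Edge]) -> bool:
    """True if the pattern words can be assigned to distinct tokens so that,
    for every (head, child) edge, the child token's head is the head token."""
    names: List[str] = []
    for head, child in edges:
        for name in (head, child):
            if name not in names:
                names.append(name)

    def search(i: int, assigned: Dict[str, int]) -> bool:
        if i == len(names):
            return all(tokens[assigned[child]][1] == assigned[head]
                       for head, child in edges)
        for pos, (text, _) in enumerate(tokens):
            if text == names[i] and pos not in assigned.values():
                assigned[names[i]] = pos
                if search(i + 1, assigned):
                    return True
                del assigned[names[i]]
        return False

    return search(0, {})


# Edge lists mirroring the three DependencyMatcher patterns.
SPONTANEOUS_REPORT = [("report", "spontaneous"), ("report", "from")]
RECEIVED_REPORT = [("received", "report"), ("received", "from")]
REPORT_RECEIVED = [("report", "received"), ("received", "from")]

# "A spontaneous report was received from a contactable physician ."
sentence_a = [("A", 2), ("spontaneous", 2), ("report", 4), ("was", 4),
              ("received", 4), ("from", 4), ("a", 8), ("contactable", 8),
              ("physician", 5), (".", 4)]
# "This is a spontaneous report from a contactable physician ."
sentence_b = [("This", 1), ("is", 1), ("a", 4), ("spontaneous", 4),
              ("report", 1), ("from", 4), ("a", 8), ("contactable", 8),
              ("physician", 5), (".", 1)]
# "This is a report received from a contactable pharmacist ."
sentence_c = [("This", 1), ("is", 1), ("a", 3), ("report", 1),
              ("received", 3), ("from", 4), ("a", 8), ("contactable", 8),
              ("pharmacist", 5), (".", 1)]

for name, sent in (("a", sentence_a), ("b", sentence_b), ("c", sentence_c)):
    print(name, [match_edges(sent, p) for p in
                 (SPONTANEOUS_REPORT, RECEIVED_REPORT, REPORT_RECEIVED)])
```

Under these assumed parses, each sentence matches exactly one pattern. This is also why the cascade tries the patterns in turn: the same information ("who sent the report") can surface in different syntactic shapes.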

Who reported the adverse reaction?

Let us do a bit more analysis on who reported the adverse reaction.

As you have seen, a large majority of the TEMPORAL field values contain text like this (paraphrasing):

"The causal relationship between BNT162B2 and the events cannot be excluded"

So if we can establish that the person who reported it is not a layperson, that should add a lot more credence to the causation analysis.

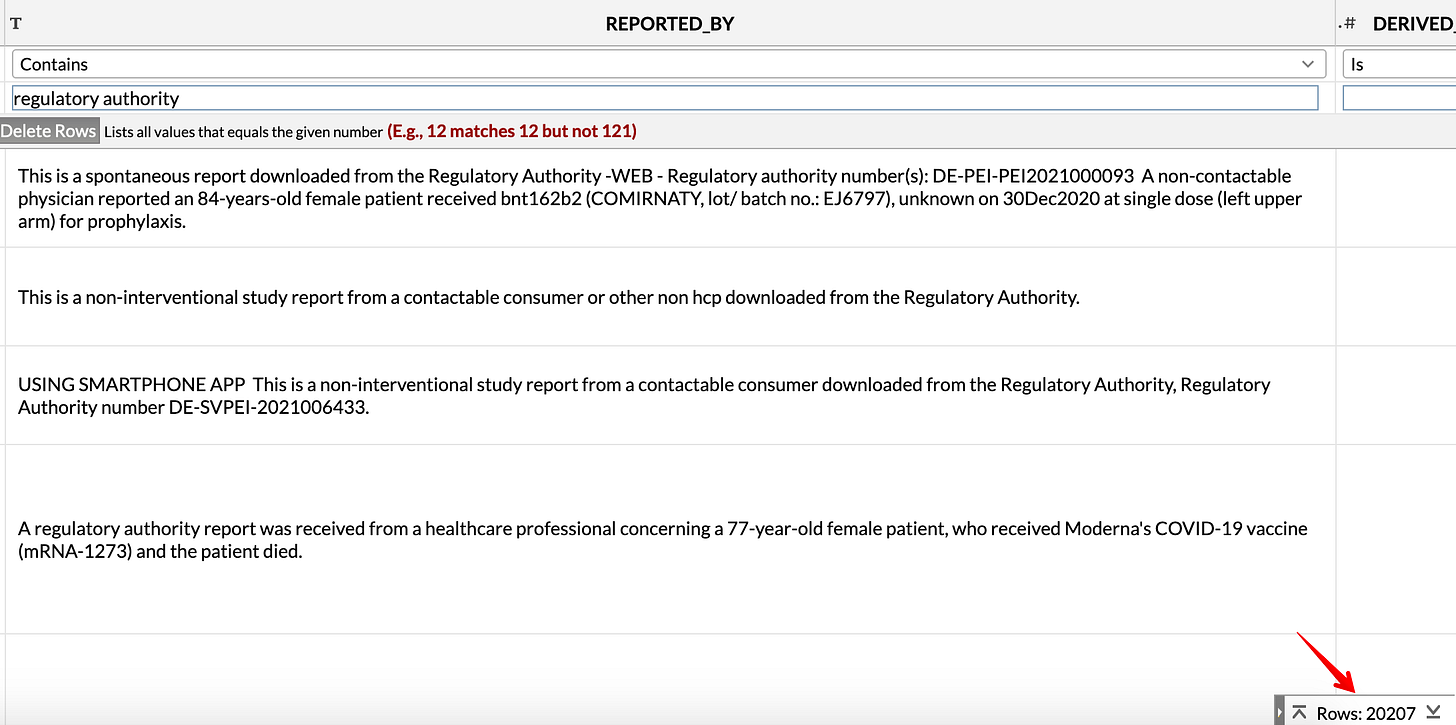

We can see that 20207 reports (over 70%) were downloaded from the “regulatory authority”.

Another 3980 came from healthcare professionals.

In total, 20207 + 3980 = 24187, or over 85% of the 28K+ reports, can be considered to have been reported or vetted by actual healthcare professionals.
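The percentages in this section can be recomputed directly from the counts quoted above. `share` is just an illustrative helper.

```python
def share(part: int, total: int) -> float:
    """Percentage of total, rounded to one decimal place."""
    return round(100 * part / total, 1)


TOTAL = 28239       # reports with a sentence containing 'temporal' and 'excluded'
IDENTIFIED = 26217  # reports where a reporter sentence was found
REGULATORY = 20207  # downloaded from the regulatory authority
HCP = 3980          # from healthcare professionals

print(share(IDENTIFIED, TOTAL))        # 92.8
print(share(REGULATORY, TOTAL))        # 71.6
print(share(REGULATORY + HCP, TOTAL))  # 85.7
```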

Missing Age Values

To give you an idea of how much information is available in the narrative text but not translated into the corresponding column, let us consider the number of reports where AGE_YRS is empty but DERIVED_AGE (the age inferred from the narrative text) is not.
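How DERIVED_AGE is extracted isn't shown here. As a minimal sketch, assuming narratives state ages in forms such as "45-year-old", "45 years old", "aged 45" or "age: 45", a couple of regular expressions can pull out a first candidate. The patterns and the `derive_age` name are hypothetical. Real narratives will need more cases (for example, infant ages given in months).

```python
import re
from typing import Optional

# Hypothetical patterns: "a 45-year-old", "45 years old", "aged 45", "age: 45".
AGE_PATTERNS = [
    re.compile(r'\b(\d{1,3})[- ]years?[- ]old\b', re.IGNORECASE),
    re.compile(r'\baged?\s*:?\s*(\d{1,3})\b', re.IGNORECASE),
]


def derive_age(symptom_text: str) -> Optional[int]:
    """Return the first plausible age (0-120) stated in the narrative, else None."""
    for pattern in AGE_PATTERNS:
        for match in pattern.finditer(symptom_text):
            age = int(match.group(1))
            if 0 <= age <= 120:
                return age
    return None


print(derive_age("A 45-year-old female patient received BNT162B2."))
```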

6970 reports are missing the AGE_YRS field.

Of these, we can infer the age from the narrative text for 3829, which is over 50% of the missing ones.

Within this group, 2586 reports (just over two-thirds) had a SYMPTOM_TEXT which was removed in the last 2 months. I had to map each of these reports to the older version of the CSV file to get the SYMPTOM_TEXT for this analysis.
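Mapping a report back to an older CSV version is essentially a lookup keyed on VAERS_ID. This is a minimal sketch. It assumes both versions have been read as lists of row dictionaries (for example with `csv.DictReader`), and that a report whose narrative was removed is still present in the newer file with an empty SYMPTOM_TEXT. The function name and the sample rows are made up.

```python
import csv
import io
from typing import Dict, List


def restore_symptom_text(current: List[Dict[str, str]],
                         older: List[Dict[str, str]]) -> int:
    """Fill empty SYMPTOM_TEXT values in `current` from `older`, matched on VAERS_ID.

    Modifies `current` in place and returns the number of rows restored."""
    older_text = {row['VAERS_ID']: row['SYMPTOM_TEXT']
                  for row in older if row.get('SYMPTOM_TEXT', '').strip()}
    restored = 0
    for row in current:
        if not row.get('SYMPTOM_TEXT', '').strip() and row['VAERS_ID'] in older_text:
            row['SYMPTOM_TEXT'] = older_text[row['VAERS_ID']]
            restored += 1
    return restored


# Tiny in-memory stand-ins for the two CSV versions (made-up IDs and text).
old_csv = io.StringIO("VAERS_ID,SYMPTOM_TEXT\n1001,a 52-year-old patient...\n1002,fever\n")
new_csv = io.StringIO("VAERS_ID,SYMPTOM_TEXT\n1001,\n1002,fever\n")
current_rows = list(csv.DictReader(new_csv))
older_rows = list(csv.DictReader(old_csv))
print(restore_symptom_text(current_rows, older_rows))
```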

First of all, it is bad enough that VAERS reports which hint that the vaccine caused an adverse reaction don’t even get a follow-up to surface the age of the patient.

It is worse if the narrative text has this information but it is not translated to the corresponding field.

And it is much worse if the narrative text is also deleted so there isn’t any way to go back and verify if VAERS reports are internally consistent.

Why does it matter that the field is not translated?

It matters because reports with untranslated fields will not show up in searches (e.g. you can search by age on MedAlerts and OpenVAERS), nor in the summary reports which are usually cited by health authorities.
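Here is a toy illustration of why this matters: an age-range search that only looks at AGE_YRS skips a report whose age is known only from the narrative. The rows are made up, and DERIVED_AGE is the column from this analysis rather than an official VAERS field. `search_by_age` is a hypothetical function, not how MedAlerts or OpenVAERS implement their search.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def search_by_age(rows: List[Row], low: float, high: float,
                  use_derived: bool = False) -> List[Row]:
    """Rows whose age is within [low, high].

    By default only AGE_YRS is used; with use_derived=True an empty
    AGE_YRS falls back to DERIVED_AGE."""
    hits = []
    for row in rows:
        age = row.get('AGE_YRS')
        if age is None and use_derived:
            age = row.get('DERIVED_AGE')
        if age is not None and low <= age <= high:
            hits.append(row)
    return hits


rows = [
    {'VAERS_ID': 1, 'AGE_YRS': 34.0, 'DERIVED_AGE': None},
    # made-up row whose age is only stated in the narrative text
    {'VAERS_ID': 2, 'AGE_YRS': None, 'DERIVED_AGE': 29.0},
]
print(len(search_by_age(rows, 18, 40)))                    # 1
print(len(search_by_age(rows, 18, 40, use_derived=True)))  # 2
```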

In fact, at first glance, it looks like almost none of the research papers which have done data mining analysis on VAERS (39 papers as of 29th Dec 2022) are aware of the existence of the SYMPTOM_TEXT field (2 papers as of 29th Dec 2022).

(Note: I obviously need to do a little more analysis to support that last claim. A Google Scholar search might be a useful indicator of relative numbers, but it is probably not the full picture.)

See if you might be able to leverage some of this code:

https://deepdots.substack.com/p/overview-ai-fixed-150000-lot-numbers

The link at the top leads to the technical page, which links to the inputs/outputs. In the inputs, note the file with populated ages, for example.